The Internet of Things (IoT) is all around us. It’s in the digital fabric of a big city, the brain of a modern factory, the way your smart home can be controlled from a tablet, and even the tech telling your fridge it’s time to order a quart of milk.

As these examples show, IoT is fast becoming a must-have. Organizations and individuals alike are turning to IoT to gain greater control and flexibility over the technologies they regularly use. Increasingly, they’re doing it with the intelligent edge.

The intelligent edge moves command and control from the core to the edge, closer to where today’s smart devices and sensors are actually installed. That shift is needed because so many IoT devices and connections are now active, with more coming online every day, and sending all of their data back to a central core adds delay and congests the network.

Communicating with millions of connected devices via a few centralized data centers is the old way of doing things. The new method is a vast network of local nodes capable of collecting, processing, analyzing, and making decisions from the IoT information as close to its origin as possible.
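To make the "collect, process, analyze and decide locally" idea concrete, here is a minimal sketch of what a single edge node might do, assuming hypothetical temperature sensors and a hypothetical alert limit. The node condenses many raw readings into one small summary per sensor and makes a simple decision on the spot, so only the compact summary (not every reading) would need to travel to the core. The names `Reading`, `summarize_locally` and `decide` are illustrative, not part of any real IoT platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str  # hypothetical sensor identifier
    value: float    # e.g. a temperature in degrees Celsius


def summarize_locally(readings: list[Reading]) -> dict[str, dict]:
    """Runs on the edge node: collapse raw readings into one small summary per sensor."""
    by_sensor: dict[str, list[float]] = {}
    for r in readings:
        by_sensor.setdefault(r.sensor_id, []).append(r.value)
    return {
        sid: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
        for sid, vals in by_sensor.items()
    }


def decide(summary: dict[str, dict], limit: float) -> list[str]:
    """Also on the edge node: flag sensors whose peak reading exceeds a local limit."""
    return sorted(sid for sid, s in summary.items() if s["max"] > limit)


# 1,000 raw readings from two hypothetical sensors...
readings = [Reading("temp-1", 20.0 + i % 5) for i in range(500)]
readings += [Reading("temp-2", 30.0 + i % 10) for i in range(500)]

summary = summarize_locally(readings)  # ...become two compact summaries
alerts = decide(summary, limit=35.0)   # local decision, no round trip to the core
print(summary)
print(alerts)  # ['temp-2']
```

In a real deployment the summary would be forwarded to the central data center on a schedule, while time-sensitive decisions like the alert above stay at the edge.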

Controlling IoT

To better understand the relationship between IoT and intelligent edge, let’s look at two use cases: manufacturing and gaming.

Modern auto manufacturers like Tesla and Rivian use IoT to control their industrial robots. Each robot is fitted with multiple sensors and actuators. The sensors report the robot’s current position and condition, and the actuators control its movements.

In this application, the intelligent edge acts as a small data center in or near the factory where the robots work. Instead of waiting for data to travel to a faraway data center, factory managers can use the intelligent edge to quickly capture, process and analyze data, and then act on it just as quickly.

Acting on that data may include performing preventative or reactive maintenance, adjusting schedules to conserve power, or retasking robots based on product configuration changes.
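As a rough illustration of the maintenance case, the sketch below shows a rule an edge node could apply to each robot’s sensor data without consulting a distant data center. The sensor fields, robot IDs and threshold values are all hypothetical; real limits would come from the robot vendor’s specifications, and production systems often use trend or model-based predictions rather than fixed thresholds.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    PREVENTIVE = "schedule preventive maintenance"
    REACTIVE = "stop and repair now"


@dataclass
class RobotStatus:
    robot_id: str
    motor_temp_c: float    # hypothetical actuator temperature sensor
    vibration_mm_s: float  # hypothetical vibration sensor (RMS velocity)


# Hypothetical limits, for illustration only.
TEMP_WARN_C = 70.0
TEMP_FAULT_C = 90.0
VIBRATION_WARN_MM_S = 4.5


def choose_action(status: RobotStatus) -> Action:
    """Decide locally whether a robot needs reactive or preventive maintenance."""
    if status.motor_temp_c >= TEMP_FAULT_C:
        return Action.REACTIVE
    if status.motor_temp_c >= TEMP_WARN_C or status.vibration_mm_s >= VIBRATION_WARN_MM_S:
        return Action.PREVENTIVE
    return Action.NONE


fleet = [
    RobotStatus("weld-01", 55.0, 1.2),
    RobotStatus("weld-02", 74.0, 2.0),
    RobotStatus("paint-07", 92.5, 3.1),
]
plan = {s.robot_id: choose_action(s) for s in fleet}
for robot_id, action in plan.items():
    print(f"{robot_id}: {action.value}")
```

Because the rule runs next to the robots, a fault like the one on the hypothetical `paint-07` can trigger a stop immediately rather than after a round trip to the core.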

The benefits of a hyper-localized setup like this can prove invaluable for manufacturers. Using the intelligent edge can save them time, money and person-hours by speeding both analysis and decision-making.

For manufacturers, the intelligent edge can also add new layers of security. Data is significantly more vulnerable while in transit, so cutting the distance it travels and its reliance on external networks also removes many of the threat vectors cybercriminals could exploit.

Gaming is another marquee use case for the intelligent edge. Resource-intensive games such as “Fortnite” and “World of Warcraft” demand high-speed access to the data generated by the game itself and a massive online gaming community of players. With speed at such a high premium, waiting for that data to travel to and from the core isn’t an option.

Instead, the intelligent edge lets game providers collect and process data near their players. The closer proximity lowers latency by limiting the distance the data travels. It also improves reliability, because a shorter path crosses fewer network links where traffic can be delayed or dropped. The result is faster, more responsive gameplay.
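Why does distance matter so much? A back-of-the-envelope sketch helps. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km per second, or roughly 5 microseconds per kilometer one way. The distances below are hypothetical, and the calculation covers propagation delay only; routing, queuing and server processing add more on top.

```python
# Approximate signal speed in optical fiber: ~200,000 km/s = ~200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone (no routing or queuing)."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances between a player and the server handling their game.
for label, km in [("distant core data center", 1500), ("nearby edge node", 50)]:
    print(f"{label}: {round_trip_propagation_ms(km):.1f} ms")
```

Under these assumptions the far-away server costs about 15 ms per round trip before any other overhead, while the nearby edge node costs about 0.5 ms, a gap that fast-paced multiplayer games can feel on every update.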

Tech at the edge

The intelligent edge is sometimes described as a network of localized data centers. That’s true as far as it goes, but it’s not the whole story. In fact, the intelligent edge infrastructure’s size, function and location come with specific technological requirements.

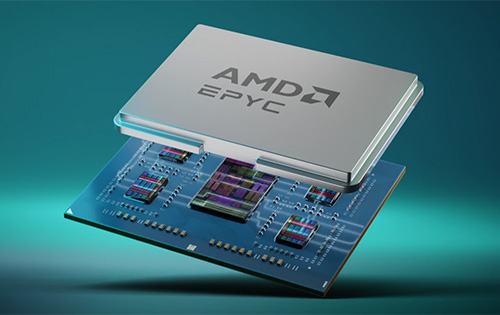

Unlike a traditional data center architecture, the edge is often better served by rugged form factors housing low-cost, high-efficiency components. These components, including the recently released AMD EPYC 8004 Series processors, offer fewer cores, lower heat output and lower prices.

The AMD EPYC 8004 Series processors share the same 5nm ‘Zen 4c’ core complex die (CCD) chiplets and 6nm AMD EPYC I/O Die (IOD) as the more powerful AMD EPYC 9004 Series.

However, the AMD EPYC 8004 Series takes a more efficiency-minded approach than its data center-focused cousins. Nowhere is this better illustrated than by the entry-level AMD EPYC 8024PN processor, which provides a scant 8 cores and a thermal design power (TDP) of just 80 watts. AMD says this could save customers thousands of dollars in energy costs over a five-year period.
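To see how a lower TDP can add up over five years, here is a simple energy-cost sketch. It assumes, hypothetically, that each processor draws its full TDP around the clock at a flat electricity price of $0.15 per kWh, and compares the 80 W part with a hypothetical 200 W alternative. TDP is a thermal design figure rather than measured power draw, and this ignores cooling overhead, so treat the output as an illustration of the arithmetic, not a reproduction of AMD’s own estimate.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap days


def energy_cost_usd(watts: float, years: float, usd_per_kwh: float) -> float:
    """Cost of drawing `watts` continuously for `years` at a flat electricity price."""
    kwh = watts * HOURS_PER_YEAR * years / 1000
    return kwh * usd_per_kwh


PRICE = 0.15  # hypothetical flat electricity price, USD per kWh

low = energy_cost_usd(80, 5, PRICE)    # 80 W TDP, running flat out for five years
high = energy_cost_usd(200, 5, PRICE)  # hypothetical 200 W alternative
print(f"80 W: ${low:,.2f} | 200 W: ${high:,.2f} | difference: ${high - low:,.2f}")
```

Under these assumptions the per-processor difference comes to several hundred dollars; multiplied across the many servers spread over edge sites, savings in the thousands become plausible.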

To deploy the AMD silicon, IT engineers can choose from an array of intelligent edge systems from suppliers, including Supermicro. The selection includes expertly designed form factors for industrial, intelligent retail and smart-city deployments.

High-performance rack mount servers like the Supermicro H13 WIO are designed for enterprise-edge deployments that require data-center-class performance. The capacity to house multiple GPUs and other hardware accelerators makes the Supermicro H13 an excellent choice for deploying AI and machine learning applications at the edge.

The future of the edge

The intelligent edge is another link in a chain of data capture and analysis that gets longer every day. As more individuals and organizations deploy IoT-based solutions, an intelligent edge infrastructure helps them store and mine that information faster and more efficiently.

The insights provided by an intelligent edge can help us improve medical diagnoses, better control equipment, and more accurately predict human behavior.

As the intelligent edge architecture advances, more businesses will be able to deploy solutions that enable them to cut costs and improve customer satisfaction simultaneously. That kind of deal makes the journey to the edge worthwhile.

Part 1 of this two-part blog series on the intelligent edge looked at the broad strokes of this emerging technology and how organizations use it to increase efficiency and reliability. Read Part 1 now.
