Artificial intelligence is being adapted for specific industry verticals. That now includes retail.

Supermicro, AMD and Wobot Intelligence Inc., a video intelligence supplier, are partnering to provide retailers with a short-depth server they can use to drive AI-powered analysis of their in-store videos. With these analyses, retailers can improve store operations, elevate the customer experience and boost sales.

The new server system was recently showcased by the three partners at NRF Europe 2025, an international conference for retailers. This year’s NRF Europe was held in Paris, France, in mid-September.

The new retail system is based on a Supermicro 1U server, model AS -1115S-FWTRT. It’s a short-depth front I/O system powered by a single AMD EPYC 8004 processor.

The server’s other features include dual 10G ports, dual 2.5-inch drive bays, up to 768GB of DDR5 memory, and an 800W redundant platinum power supply. This server is air-cooled by as many as six heavy-duty fans, and it supports a pair of single-width GPUs.

Good to Go

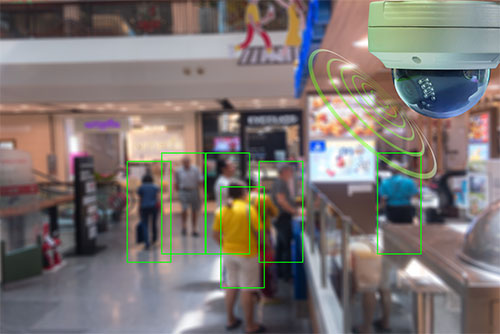

The retail system’s video-analysis software, provided by Wobot.ai, features a single dashboard, performance benchmarking, and easy installation and configuration. It’s designed to work with a user’s existing CCTV setup.

The company’s WoConnect app helps users connect digital video recorders (DVRs) and network video recorders (NVRs) in their private network to their Wobot.ai account. The app routes the user’s camera feeds to the AI.

Target use cases for retailers include store operations, loss prevention and compliance, and analysis of customer behavior and footfall.

More specifically, retailers can use the system to conduct video analyses that include:

- Zone-based analytics: Which areas of the store draw the most attention? Which products draw interaction? How do customers move through the store?

- Heat maps and event tracking: Visualize “crowd magnets” to improve future sales.

- Customer-path analysis: Observe which sections of the store customers explore the most, and also see where they linger.
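To make these analyses more concrete, here is a minimal Python sketch of how zone dwell time and a simple heat map could be computed from customer positions. It is an illustration only, not Wobot.ai's implementation. It assumes that some upstream video-analytics step has already turned camera footage into floor-plan coordinates; the `Zone` class, both function names, and the sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned rectangle on the store floor plan, in meters."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x < self.x_max and self.y_min <= y < self.y_max


def dwell_seconds_by_zone(track, zones):
    """Sum the time one customer spends in each zone.

    `track` is a time-ordered list of (timestamp_seconds, x, y) samples.
    Each interval between samples is credited to the zone of its starting point.
    """
    totals = defaultdict(float)
    for (t0, x, y), (t1, _, _) in zip(track, track[1:]):
        for zone in zones:
            if zone.contains(x, y):
                totals[zone.name] += t1 - t0
                break
    return dict(totals)


def heat_map(tracks, width_m, height_m, cell_m=1.0):
    """Count position samples per grid cell; higher counts mark busier spots."""
    cols = int(width_m // cell_m)
    rows = int(height_m // cell_m)
    grid = [[0] * cols for _ in range(rows)]
    for track in tracks:
        for _, x, y in track:
            col, row = int(x // cell_m), int(y // cell_m)
            if 0 <= row < rows and 0 <= col < cols:
                grid[row][col] += 1
    return grid


# Made-up sample data: one customer walking from the entrance to produce.
zones = [Zone("entrance", 0, 0, 4, 4), Zone("produce", 4, 0, 8, 4)]
track = [(0, 1.0, 1.0), (5, 2.0, 1.5), (12, 5.0, 2.0), (30, 6.5, 3.0), (33, 9.0, 3.0)]

print(dwell_seconds_by_zone(track, zones))  # {'entrance': 12.0, 'produce': 21.0}
grid = heat_map([track], width_m=8, height_m=4)
```

In this sketch, long dwell times flag the places where customers linger, and the grid counts show which parts of the floor draw the most traffic. The final sample falls outside the 8-meter-wide floor plan, so the heat map ignores it.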

Retailers using the system can gain benefits that include faster checkout, fewer customer walkaways, fine-tuned staffing levels, and improved product placement.

For example, a chain of juice bars with nearly 145 locations in California turned to Wobot.ai for help speeding customer service and improving employee productivity. Based on its video analyses, the retailer worked with Wobot.ai to design a pilot program for 10 stores. In just three months, the pilot delivered additional revenue in the test stores equivalent to an annualized increase of 2% to 2.5%.

Wobot.ai also offers its video intelligence systems to other verticals, including hospitality, food service and security.

Edgy

One important feature of the new server is that it allows retailers to run real-time AI-powered video analysis at the edge. The Supermicro server is housed in a short-depth form factor, meaning it can be run in retail sites that lack a full-fledged data center.

Similarly, the system’s AMD EPYC 8004 processor has been optimized for power efficiency, which is important for installations at the edge. Featuring up to 64 ‘Zen 4c’ dense cores, this AMD processor is specifically designed for intelligent edge and communications workloads.

By running the AI analysis on-premises, the new system also offers low latency and high levels of privacy. Wobot.ai says its software can scale across thousands of locations.

And the software is designed to be integrated easily with retailers’ existing camera infrastructure. In this way, it offers fast time-to-value and a quick return on investment.

Do you have retail customers looking for an edge—with AI at the edge? Tell them about this new retail solution today.

Do More:

- Meet Wobot.ai

- Check out the Supermicro 1U short-depth server

- Explore AMD EPYC 8004 Series processors

- Learn more about short-depth servers

- Attend a “Meet the Experts” webinar with Wobot.ai’s CEO and others on Oct. 23, 2025: Fast Everywhere: How Wobot’s multi-modal AI transforms drive-thru and in-store experiences