Into storage? Then learn about the latest storage innovations at the Supermicro Open Storage Summit ’24. It’s an online event happening over three weeks, August 13 – 29. And it’s free to attend.

The theme of this year’s summit is “enabling software-defined storage from enterprise to AI.” Sessions are aimed at anyone involved with data storage, from CIOs to IT support professionals and everyone in between.

The Supermicro Open Storage Summit ’24 will bring together executives and technical experts from across the software-defined storage ecosystem. They’ll discuss the latest developments enabling software-defined storage solutions.

Each session will feature Supermicro product experts alongside leaders from hardware and software suppliers. Together, these companies help drive the software-defined storage ecosystem forward.

Seven Sessions

This year’s Open Storage Summit will feature seven sessions. They’ll cover topics and use cases including storage for AI, CXL, storage architectures, and much more.

Rob Strechay, managing director and principal analyst at theCUBE Research, will host and moderate the sessions. theCUBE Research provides IT leaders with competitive intelligence, market analysis, and trend tracking.

All the Storage Summit sessions will start at 10 a.m. PDT / 1 p.m. EDT and run for 45 minutes. All sessions will also be available for on-demand viewing later. But by attending a live session, you’ll be able to participate in the X-powered Q&A with the speakers.

What’s On Tap

What can you expect? To give you an idea, here are a few of the scheduled sessions:

Aug. 14: AI and the Future of Media Storage Workflows: Innovations for the Entertainment Industry

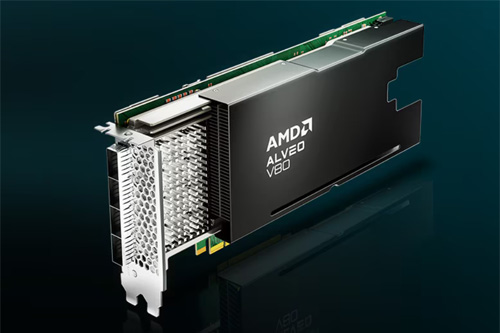

Whether it’s movies, TV, or corporate videos, the post-production process, including editing, special effects, coloring, and distribution, requires storage solutions that deliver both high performance and large capacity. In this session, Supermicro, Quantum, AMD, and Western Digital will discuss how primary and secondary storage can be optimized for post-production workflows.

Aug. 20: Hyperscale AI: Secure Data Services for CSPs

Cloud service providers (CSPs) must scale their AI operations seamlessly across thousands of GPUs while ensuring industry-leading reliability, security, and compliance. Speakers from Supermicro, AMD, VAST Data, and Solidigm will explain how CSPs can deploy AI models at unprecedented scale with confidence and security.

There’s plenty more on the agenda, too. Get the full details on the Supermicro Open Storage Summit ’24 and register to attend now.