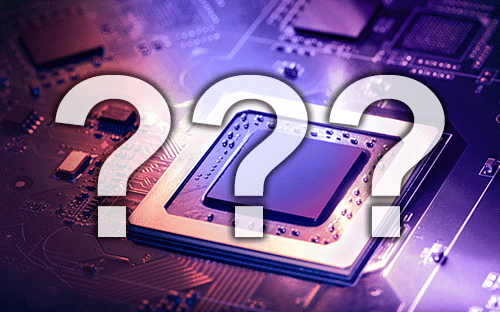

The AMD Instinct MI300A APU, set to ship in this year’s second half, combines the compute power of a CPU with the capabilities of a GPU. Your data-center customers should be interested if they run high-performance computing (HPC) or AI workloads.

More specifically, the AMD Instinct MI300A is an integrated data-center accelerator that combines AMD Zen 4 cores, AMD CDNA3 GPUs and high-bandwidth memory (HBM) chiplets. In all, it has more than 146 billion transistors.

This AMD component uses 3D die stacking to enable extremely high bandwidth among its parts. In fact, it has nine 5nm chiplets 3D-stacked on top of four 6nm chiplets, with significant HBM surrounding them.

And it’s coming soon. The AMD Instinct MI300A is currently in AMD’s labs and will soon be sampled to customers. AMD says shipments are scheduled for the second half of this year.

‘Most complex chip’

The AMD Instinct MI300A was publicly displayed for the first time earlier this year, when AMD CEO Lisa Su held up a sample of the component during her CES 2023 keynote. “This is actually the most complex chip we’ve ever built,” Su told the audience.

A few tech blogs have gotten their hands on early samples. One of them, Tom’s Hardware, was impressed by the “incredible data throughput” among the Instinct MI300A’s CPU, GPU and memory dies.

The Tom’s Hardware reviewer added that this throughput will let the CPU and GPU work on the same data in memory simultaneously, saving power, boosting performance and simplifying programming.

Another blogger, Karl Freund, a former AMD engineer who now works as a market researcher, wrote in a recent Forbes blog post that the Instinct MI300 is a “monster device” (in a good way). He also congratulated AMD for “leading the entire industry in embracing chiplet-based architectures.”

Previous generation

The new AMD accelerator builds on a previous generation, the AMD Instinct MI200 Series. That series is now used in a variety of systems, including Supermicro’s A+ Server 4124GQ-TNMI. This completely assembled system supports the AMD Instinct MI250 OAM (OCP Acceleration Module) accelerator and AMD Infinity Fabric technology.

The AMD Instinct MI200 accelerators are designed with the company’s 2nd gen AMD CDNA Architecture, which encompasses the AMD Infinity Architecture and Infinity Fabric. Together, they offer an advanced platform for tightly connected GPU systems, letting workloads share data quickly and efficiently.

The MI200 series offers P2P connectivity with up to 8 intelligent 3rd Gen AMD Infinity Fabric Links with up to 800 GB/sec. of peak total theoretical I/O bandwidth. That’s 2.4x the GPU P2P theoretical bandwidth of the previous generation.
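To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above and rests on two assumptions that AMD's figures do not spell out: that the peak total is spread evenly across all eight links, and that the 2.4x claim compares the same total-bandwidth metric. The function and constant names are illustrative only.

```python
# Figures quoted in the article for the MI200 series.
TOTAL_PEAK_GB_PER_SEC = 800.0   # peak total theoretical I/O bandwidth
MAX_LINKS = 8                   # 3rd Gen AMD Infinity Fabric Links
GENERATION_SPEEDUP = 2.4        # stated gain over the previous generation


def per_link_bandwidth(total_gb_per_sec: float, links: int) -> float:
    """Average bandwidth per link, ASSUMING an even split across links."""
    return total_gb_per_sec / links


def implied_previous_bandwidth(total_gb_per_sec: float, speedup: float) -> float:
    """Previous-generation total implied by the stated speedup.

    ASSUMES the 2.4x figure compares like-for-like total P2P bandwidth.
    """
    return total_gb_per_sec / speedup


per_link = per_link_bandwidth(TOTAL_PEAK_GB_PER_SEC, MAX_LINKS)
previous_total = implied_previous_bandwidth(TOTAL_PEAK_GB_PER_SEC, GENERATION_SPEEDUP)

print(f"Average per link: {per_link:.0f} GB/sec")
print(f"Implied previous-generation total: {previous_total:.0f} GB/sec")
```

Under those assumptions, each link averages about 100 GB/sec., and the previous generation's total works out to roughly 333 GB/sec. These are theoretical peaks, so real workloads will see less.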

Supercomputing power

The same kind of performance now available to commercial users of the AMD-Supermicro system is also being applied to scientific supercomputers.

The AMD Instinct MI250X accelerator is now used in the Frontier supercomputer built for the U.S. Dept. of Energy. That system’s peak performance is rated at 1.6 exaflops, or over a billion billion floating-point operations per second.

The AMD Instinct MI250X accelerator provides Frontier with flexible, high-performance compute engines, high-bandwidth memory, and scalable fabric and communications technologies.

Looking ahead, the AMD Instinct MI300A APU will be used in Frontier’s successor, known as El Capitan. Scheduled for installation late this year, this supercomputer is expected to deliver at least 2 exaflops of peak performance.
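For readers who want to check the exaflop math, the short Python sketch below converts the two peak figures quoted in this article into raw operations per second and compares them. It assumes only the standard definition of an exaflop as 10^18 floating-point operations per second. The variable names are illustrative, and the El Capitan number is the "at least" figure from above, not a measured result.

```python
FLOPS_PER_EXAFLOP = 10**18      # 1 exaflop = 10^18 floating-point ops/sec
BILLION = 10**9

# Peak figures as quoted in the article.
frontier_peak_exaflops = 1.6
el_capitan_min_exaflops = 2.0   # "at least 2 exaflops" (expected, not measured)


def exaflops_to_flops(exaflops: float) -> float:
    """Convert exaflops to floating-point operations per second."""
    return exaflops * FLOPS_PER_EXAFLOP


frontier_flops = exaflops_to_flops(frontier_peak_exaflops)

# "A billion billion" is 10^9 * 10^9 = 10^18, i.e. exactly one exaflop.
frontier_billion_billions = frontier_flops / (BILLION * BILLION)

# Minimum expected step up from Frontier to El Capitan.
min_uplift = el_capitan_min_exaflops / frontier_peak_exaflops

print(f"Frontier peak: {frontier_flops:.2e} operations per second")
print(f"That is {frontier_billion_billions:.1f} billion billion per second")
print(f"El Capitan's minimum expected uplift: {min_uplift:.2f}x")
```

In other words, El Capitan's stated floor of 2 exaflops would be about 1.25 times Frontier's rated peak, or at least 25% more.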