The humble network interface card (NIC) is getting a status boost from AI.

At a fundamental level, the NIC enables one computing device to communicate with others across a network. That network could be a rendering farm run by a small multimedia production house, an enterprise-level data center, or a global network like the internet.

From smartphones to supercomputers, most modern devices use a NIC for this purpose. On laptops, phones and other mobile devices, the NIC typically connects via a wireless antenna. For servers in enterprise data centers, it’s more common to connect the hardware infrastructure with Ethernet cables.

Each NIC (or each NIC port, in the case of an enterprise NIC) has its own media access control (MAC) address. This unique identifier enables the NIC to send and receive relevant packets. Each packet, in turn, is a small chunk of a much larger data set. Breaking data into packets lets many devices share the same network links and keeps transfers moving at high speeds.
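To make the role of the MAC address concrete, here is a minimal sketch of how a NIC might decide which Ethernet frames to keep. It assumes the standard Ethernet layout (6-byte destination address, 6-byte source address, 2-byte EtherType) and ignores multicast groups and promiscuous mode, which real NICs also support. The helper names `parse_mac` and `accepts` are made up for illustration.

```python
# Illustrative only: a simplified model of MAC-based frame filtering.
BROADCAST = bytes.fromhex("ffffffffffff")


def parse_mac(text: str) -> bytes:
    """Convert a MAC address such as '02:00:00:00:00:01' to 6 raw bytes."""
    return bytes.fromhex(text.replace(":", "").replace("-", ""))


def accepts(frame: bytes, nic_mac: bytes) -> bool:
    """Return True if a NIC with address nic_mac would keep this frame."""
    if len(frame) < 14:  # 6 bytes dst + 6 bytes src + 2 bytes EtherType
        return False
    dst = frame[:6]  # the destination MAC comes first in an Ethernet frame
    return dst == nic_mac or dst == BROADCAST


# Hypothetical, locally administered addresses used only for this example.
nic = parse_mac("02:00:00:00:00:01")
other = parse_mac("02:00:00:00:00:02")
ethertype = b"\x08\x00"  # IPv4
payload = b"hello"

frame_for_us = nic + other + ethertype + payload
frame_for_other = other + nic + ethertype + payload
frame_broadcast = BROADCAST + other + ethertype + payload

print(accepts(frame_for_us, nic))     # True
print(accepts(frame_for_other, nic))  # False
print(accepts(frame_broadcast, nic))  # True
```

In real hardware this comparison happens in the NIC itself, so frames meant for other devices never reach the host CPU.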

Networking for the Enterprise

At the enterprise level, everything needs to be highly capable and powerful, and the NIC is no exception. Organizations operating full-scale data centers rely on NICs to do far more than just send emails and filter packets (that is, “watch” a data stream and keep only the data addressed to the NIC’s MAC address).

Today’s NICs are also designed to handle complex networking tasks onboard, relieving the host CPU so it can work more efficiently. This process, known as smart offloading, relies on several functions:

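- TCP segmentation offloading: Here, the NIC splits large outgoing blocks of data into network-sized TCP segments, a job the CPU would otherwise have to do.

- Checksum offloading: Here, the NIC independently computes and verifies packet checksums to detect data corrupted in transit.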

- Receive side scaling: This helps balance network traffic across multiple processor cores, preventing them from getting bogged down.

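- Remote Direct Memory Access (RDMA): This lets one machine read or write another machine’s memory directly, keeping the CPU and operating system out of the data path. In AI clusters, RDMA can also deliver data straight into GPU memory.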
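The first three functions in the list above can be sketched in software to show the work a NIC takes off the CPU. This is an illustration under stated assumptions, not how any particular NIC implements them. The 1,460-byte segment size assumes a standard 1,500-byte Ethernet MTU. The checksum follows the RFC 1071 algorithm but skips the TCP pseudo-header. The queue selection uses CRC32 as a stand-in for the Toeplitz hash that NICs typically use. All function names are hypothetical.

```python
import zlib

MSS = 1460  # assumed max TCP payload per segment on a 1,500-byte MTU link


def segment(payload: bytes, mss: int = MSS) -> list[bytes]:
    """TCP segmentation: split one large buffer into MSS-sized chunks."""
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


def internet_checksum(data: bytes) -> int:
    """Checksum: 16-bit ones' complement sum, as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = sum(int.from_bytes(data[i:i + 2], "big")
                for i in range(0, len(data), 2))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def rss_queue(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
              num_queues: int) -> int:
    """Receive side scaling: map a flow to one receive queue (one per core).

    CRC32 is a stand-in here; it only needs to be deterministic per flow.
    """
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % num_queues


data = bytes(range(256)) * 20  # 5,120 bytes of sample data
segments = segment(data)
print([len(s) for s in segments])  # [1460, 1460, 1460, 740]

# A receiver verifies a segment by summing the data plus its checksum.
for seg in segments:
    csum = internet_checksum(seg)
    assert internet_checksum(seg + csum.to_bytes(2, "big")) == 0

# Packets from the same flow always land on the same queue.
print(rss_queue("10.0.0.1", 40000, "10.0.0.2", 443, num_queues=8))
```

Because every packet of a given flow hashes to the same queue, a single core handles that flow in order, while different flows spread across the available cores.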

Important as these capabilities are, they become even more vital when dealing with AI and machine learning (ML) workloads. By taking pressure off the CPU, modern NICs enable the rest of the system to focus on running these advanced applications and processing their scads of data.

This symbiotic relationship also helps lower a server’s operating temperature and reduce its power usage. The NIC does this by increasing efficiency throughout the system, especially when it comes to the CPU.

Enter the AI NIC

Countless organizations both big and small are clamoring to stake their claims in the AI era. Some are creating entirely new AI and ML applications; others are using the latest AI tools to develop new products that better serve their customers.

Either way, these organizations must deal with the challenges now facing traditional Ethernet networks in AI clusters. Remember, Ethernet was invented over 50 years ago.

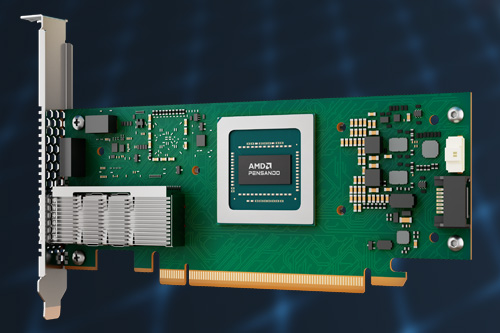

AMD has a solution: a revolutionary NIC it has created for AI workloads, the AMD AI NIC. Recently released, this card is designed to provide the intense communication capabilities demanded by AI and ML models. That includes tightly coupled parallel processing, rapid data transfers and low-latency communications.

AMD says its AI NIC offers a significant advancement in addressing the issues IT managers face as they attempt to reconcile the broad compatibility of an aging network technology with modern AI workloads. It’s a specialized network accelerator explicitly designed to optimize data transfer within back-end AI networks for GPU-to-GPU communication.

To address the challenges of AI workloads, what’s needed is a network that can support distributed computing over multiple GPU nodes with low jitter and RDMA. The AMD AI NIC is designed to manage the unique communication patterns of AI workloads and offer high throughput across all available links. It also offers congestion avoidance, reduced tail latency, scalable performance, and fast job-completion times.

Validated NIC

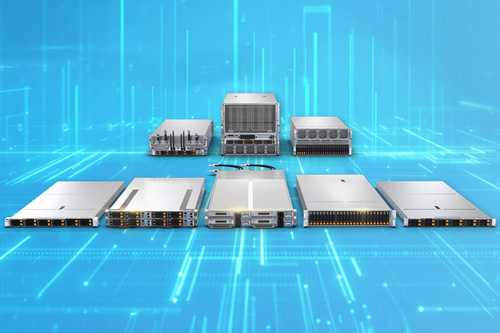

Following rigorous validation by the engineers at Supermicro, the AMD AI NIC is now supported on the Supermicro 8U GPU Server (AS -8126GS-TNMR). This behemoth is designed specifically for AI, deep learning, high-performance computing (HPC), industrial automation, retail and climate modeling.

In this configuration, AMD’s smart AI-focused NIC can offload networking tasks. This lets the Supermicro SuperServer’s dual AMD EPYC 9000-series processors run at even higher efficiency.

In the Supermicro server, the new AMD AI NIC occupies one of the myriad PCI Express x16 slots. Other optional high-performance PCIe cards include a CPU-to-GPU interconnect and up to eight AMD Instinct GPU accelerators.

In the NIC of time

A chain is only as strong as its weakest link. The chain that connects our ever-expanding global network of AI operations is strengthened by the advent of NICs focused on AI.

As NICs grow more powerful, these advanced network interface cards will help fuel the expansion of the AI/ML applications that power our homes, offices, and everything in between. They’ll also help us bypass communication bottlenecks and speed time to market.

For SMBs and enterprises alike, that’s good news indeed.

Do More:

- View: AMD AI NIC product brief.

- Check specs: Supermicro 8U GPU SuperServer.

- Read: Transforming AI networks with AMD Pensando Pollara 400 (AMD blog post).