Increasingly resource-intensive AI workloads are creating more demand for advanced data center cooling systems. Today, the most efficient and cost-effective method is liquid cooling.

A liquid-cooled PC or server relies on a liquid rather than air to remove heat from vital components such as CPUs, GPUs and AI accelerators. The heat these components produce is transferred to the liquid, which then carries it away to where it can be safely dissipated.

Most computers don’t require liquid cooling. That’s because general-use consumer and business machines don’t generate enough heat to justify liquid cooling’s higher upfront costs and additional maintenance.

However, high-performance systems designed for tasks such as gaming, scientific research and AI can often operate better, longer and more efficiently when equipped with liquid cooling.

How Liquid Cooling Works

For the actual coolant, most liquid systems use either water or dielectric fluids. Before water is added to a liquid cooler, it’s demineralized to prevent corrosion and build-up. And to prevent freezing and bacterial growth, the water may also be mixed with a combination of glycol, corrosion inhibitors and biocides.

Thus treated, the coolant is pushed through the system by an electric pump. A single liquid-cooled PC or server needs its own pump. But for enterprise data center racks containing multiple servers, the liquid is pumped by what’s known as an in-rack coolant distribution unit (CDU). From there, the liquid is distributed to each server via a coolant distribution manifold (CDM).

As the liquid flows through the system, it’s channeled into cold plates that are mounted atop the system’s CPUs, GPUs, DIMMs, PCIe switches and other heat-producing components. Each cold plate has microchannels through which the liquid flows, absorbing and carrying away each component’s thermal energy.
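For a rough sense of how much liquid a cold plate needs, the heat a coolant carries away can be estimated with the standard relation Q = m_dot * c_p * delta_T. The sketch below is illustrative only. The 1,000-watt heat load and the 10-degree temperature rise are assumed values, not vendor figures, and the function name is hypothetical. It uses approximate textbook properties for plain water; a glycol mix would carry somewhat less heat per liter.

```python
# Approximate properties of plain water near room temperature.
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 0.997


def required_flow_l_per_min(heat_load_w: float, temp_rise_k: float) -> float:
    """Estimate the water flow needed to absorb a heat load.

    Rearranges Q = m_dot * c_p * delta_T to solve for the mass flow m_dot,
    then converts kilograms per second to liters per minute.
    """
    if heat_load_w < 0 or temp_rise_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise must be > 0")
    mass_flow_kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * temp_rise_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical example: a 1,000 W accelerator, coolant allowed to warm by 10 K.
flow = required_flow_l_per_min(heat_load_w=1000.0, temp_rise_k=10.0)
print(f"Required flow: {flow:.2f} L/min")
```

Under these assumptions, a single 1,000-watt component needs roughly 1.4 liters of water per minute. Doubling the heat load, or halving the allowed temperature rise, doubles the required flow.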

The next step is to safely dissipate the collected heat. To accomplish this, the liquid is pumped back through the CDU, which sends the now-hot liquid to a mechanism that removes the heat. This is typically done using chillers, cooling towers or heat exchangers.

Finally, the cooled liquid is sent back to the systems’ heat-producing components to begin the process again.

Liquid Pros & Cons

The most compelling aspect of liquid cooling is its efficiency. Water moves heat up to 25 times better than air while using less energy to do it. Compared with traditional air cooling, liquid cooling can reduce cooling energy costs by up to 40%.

But there’s more to the efficiency of liquid cooling than just cutting costs. Liquid cooling also enables IT managers to move servers closer together, packing in more power and storage per square foot. Given the high cost of data center real estate, and how full many data centers already are, that’s an important benefit.

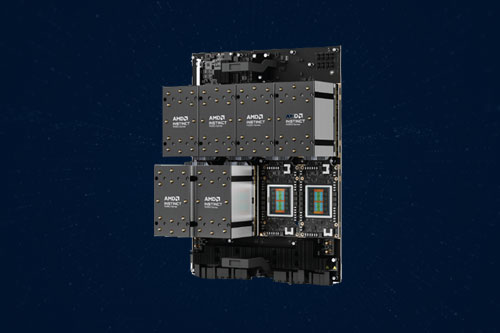

In addition, liquid cooling can better handle the latest high-powered processing components. For instance, Supermicro says its DLC-2 next-generation Direct Liquid-Cooling solutions, introduced in May, can accommodate warmer liquid inflow temperatures while also enhancing AI performance per watt.

But liquid cooling systems have their downsides, too. For one, higher upfront costs can present a barrier to entry. Sure, data center operators will realize a lower total cost of ownership (TCO) over the long run. But when deploying a liquid-cooled data center, they must still contend with initial capital expense (CapEx) outlays, and with justifying those costs to the CFO.
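One simple way to frame that conversation with the CFO is a payback estimate: how many years of energy savings it takes to recover the extra upfront spend. The sketch below is a minimal illustration, not a full TCO model. The dollar amounts are hypothetical, the function name is made up for this example, and the 40% savings rate is the best-case figure cited above. It ignores discounting, maintenance and insurance costs.

```python
def simple_payback_years(
    extra_capex: float,
    annual_cooling_cost: float,
    savings_fraction: float,
) -> float:
    """Years until cooling-energy savings repay the extra upfront cost.

    Uses undiscounted savings, so it understates the true payback period.
    """
    if extra_capex < 0 or annual_cooling_cost <= 0:
        raise ValueError("capex must be >= 0 and annual cooling cost must be > 0")
    if not 0 < savings_fraction <= 1:
        raise ValueError("savings_fraction must be in the range (0, 1]")
    annual_savings = annual_cooling_cost * savings_fraction
    return extra_capex / annual_savings


# Hypothetical inputs: $500,000 extra CapEx, $400,000 per year spent on
# cooling energy with air, and the best-case 40% reduction.
years = simple_payback_years(
    extra_capex=500_000,
    annual_cooling_cost=400_000,
    savings_fraction=0.40,
)
print(f"Payback: {years:.1f} years")
```

With these made-up numbers, the extra investment pays for itself in a little over three years. A lower savings rate stretches that period proportionally.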

For another, IT managers might think twice about the additional complexity and risks of a liquid cooling solution. More components and variables mean more things that can go wrong. Data center insurance premiums may rise too, since a liquid cooling system can always spring a leak.

Driving Demand: AI

All that said, the market for liquid cooling systems is primed for serious growth.

As AI workloads become increasingly resource-intensive, IT managers are deploying more powerful servers to keep up with demand. These high-performance machines produce more heat than previous generations. And that creates increased demand for efficient, cost-effective cooling solutions.

How much demand? This year, global sales in the data center liquid cooling market are projected to reach $2.84 billion, according to MarketsandMarkets.

Looking ahead, the industry watcher expects the global liquid cooling market to reach $21.14 billion by 2032. If that happens, the rise will represent a compound annual growth rate (CAGR) of about 33% over the forecast period.
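The growth rate can be checked with the standard CAGR formula, (end / start) ^ (1 / years) - 1. The sketch below assumes the forecast period spans seven years, for example 2025 through 2032. The base year is not stated here, so treat that span as an assumption. The function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must all be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market sizes in billions of dollars, as quoted above.
# ASSUMPTION: a seven-year forecast period.
rate = cagr(start_value=2.84, end_value=21.14, years=7)
print(f"CAGR: {rate:.1%}")
```

Under the seven-year assumption, the result rounds to the 33% figure quoted above. A shorter or longer period would give a different rate.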

Coming Soon: Immersion Cooling

In the near future, AI workloads will likely become even more demanding. This means data centers will need to deploy—and cool—ultra-dense AI server clusters that produce tremendous amounts of heat.

To deal with this extra heat, IT managers may need the next step in data center cooling: immersion.

With immersion cooling, an entire rack of servers is submerged horizontally in a tank filled with what’s known as dielectric fluid. This is a non-conductive liquid that lets the servers’ hardware operate while submerged without short-circuiting.

Immersion cooling is being developed along two paths. The more common variety is called single-phase, and it operates similarly to an aquarium’s water filter. As pumps circulate the dielectric fluid around the servers, the fluid is heated by the servers’ components. Then it’s cooled by an external heat exchanger.

The other type of immersion cooling is known as two-phase. Here, the tank is filled with a dielectric fluid engineered to have a relatively low boiling point, around 50 C / 122 F. As this fluid is heated by the immersed servers, it boils, creating a vapor that rises to condensers installed at the top of the tank. There the vapor condenses back into a cooler liquid and drips back down into the tank.

Because boiling and condensation circulate the fluid on their own, there’s no need for electric pumps inside the tank. It’s a glimpse of a smarter, more efficient liquid future, coming soon to a data center near you.
