One of the challenges of building machine learning (ML) models is managing data. Your infrastructure must be able to process very large data sets rapidly as well as ingest both structured and unstructured data from a wide variety of sources.

Such data is typically generated in performance-intensive computing areas such as GPU-accelerated applications, structural biology and digital simulations. These applications tend to face three problems: how to fill a data pipeline efficiently, how to integrate data easily across systems and how to manage rapid changes in data storage requirements. That’s where Weka.io comes into play, providing higher-speed data ingestion and avoiding unnecessary copies of your data while making it available across the entire ML modeling space.

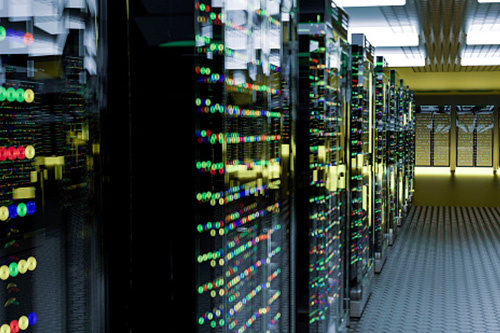

Weka’s file system, WekaFS, was developed for exactly this purpose. It unifies your entire data lake into a shared global namespace where you can more easily access and manage trillions of files stored in multiple locations from one directory. It works across both on-premises and cloud storage repositories and is optimized for cloud-intensive storage, with the aim of keeping network latency low and performance high.

This next-generation file system has several other advantages: it is easy to deploy and entirely software-based, and it provides all-flash-level performance, NAS simplicity and manageability, cloud scalability and breakthrough economics. It was designed to run on any standard x86-based server hardware with commodity SSDs, or natively in a public cloud such as AWS.

Weka’s file system is designed to scale to hundreds of petabytes, thousands of compute instances and billions of files. Read and write latency for file operations against active data is as low as 200 microseconds in some instances.
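To put a latency figure like this in context, it can help to measure small-block read latency on whatever file system you are evaluating. The snippet below is a minimal sketch, not a Weka tool: the function name `measure_read_latency_us`, the 4 KB block size and the sample count are all assumptions made for illustration. It relies on `os.pread`, so it runs on Unix-like systems only, and repeated reads of a recently written file will usually be served from the operating system's page cache, so the result is best read as a lower bound rather than a storage benchmark.

```python
import os
import statistics
import tempfile
import time


def measure_read_latency_us(path, block_size=4096, samples=200):
    """Return the median latency, in microseconds, of block-sized reads from path.

    Hypothetical helper for illustration; not part of any Weka API.
    """
    size = os.path.getsize(path)
    if size < block_size:
        raise ValueError("file is smaller than one block")
    latencies = []
    fd = os.open(path, os.O_RDONLY)
    try:
        for i in range(samples):
            # Step through the file, wrapping around so every read stays in bounds.
            offset = (i * block_size) % (size - block_size + 1)
            start = time.perf_counter_ns()
            os.pread(fd, block_size, offset)
            latencies.append((time.perf_counter_ns() - start) / 1000)
    finally:
        os.close(fd)
    return statistics.median(latencies)


if __name__ == "__main__":
    # Demonstration against a 1 MiB temporary file on the local file system.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(1024 * 1024))
    try:
        print(f"median read latency: {measure_read_latency_us(tmp.name):.1f} us")
    finally:
        os.unlink(tmp.name)
```

Pointing `path` at a file on a mounted shared file system instead of a local temporary file gives a rough first comparison; a dedicated benchmarking tool that bypasses the page cache would be needed for figures comparable to vendor numbers.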

Supermicro has produced its own NVMe Reference Architecture that supports WekaFS on some of its servers, including the Supermicro A+ AS-1114S-WN10RT and AS-2114S-WN24RT, which use AMD EPYC™ 7402P processors and at least 2TB of memory, expandable to 4TB. Both servers support hot-swappable NVMe storage modules for ultimate performance. Also check out the Supermicro WekaFS AI and HPC Solution Bundle.