There’s a new version of AMD ROCm, the open software stack designed to enable GPU programming from the low-level kernel all the way up to end-user applications.

The latest version, ROCm 6.3, adds features that include expanded operating system support, an open-source toolkit and more.

Rock On

AMD ROCm provides the tools for HIP (the heterogeneous-computing interface for portability), OpenCL and OpenMP. These include compilers, APIs, libraries for high-level functions, debuggers, profilers and runtimes.

ROCm is optimized for generative AI and HPC applications, and existing code is easy to migrate to it. Developers can use ROCm to fine-tune workloads, while partners and OEMs can integrate seamlessly with AMD to create innovative solutions.

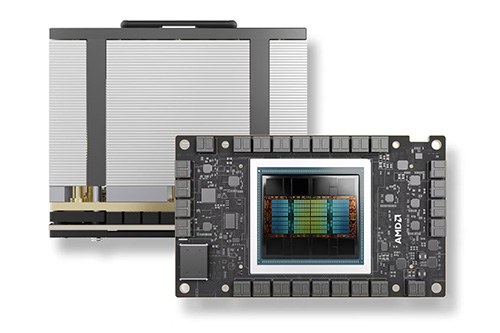

The latest release builds on ROCm 6, which AMD introduced last year. Version 6 added expanded support for AMD Instinct MI300A and MI300X accelerators, key AI support features, optimized performance, and an expanded support ecosystem.

The senior VP of AMD’s AI group, Vamsi Boppana, wrote in a recent blog post: “Our vision is for AMD ROCm to be the industry’s premier open AI stack, enabling choice and rapid innovation.”

New Features

Here’s some of what’s new in AMD ROCm 6.3:

- rocJPEG: A high-performance JPEG decode SDK for AMD GPUs.

- ROCm compute profiler and system profiler: Previously known as Omniperf and Omnitrace, these have been renamed to reflect their new direction as part of the ROCm software stack.

- Shark AI toolkit: This open-source toolkit is for high-performance serving of GenAI models and LLMs. The initial release includes support for the AMD Instinct MI300.

- PyTorch 2.4 support: PyTorch is a machine learning library used for applications such as computer vision and natural language processing. Originally developed by Meta AI, it’s now under the Linux Foundation umbrella.

- Expanded OS support: This includes added support for Ubuntu 24.04.2 and 22.04.5; RHEL 9.5; and Oracle Linux 8.10. In addition, ROCm 6.3.1 includes support for both Debian 12 and the AMD Instinct MI325X accelerator.

- Documentation updates: ROCm 6.3 offers clearer, more comprehensive guidance for a wider variety of use cases and user needs.
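For developers who want to confirm that a PyTorch installation is actually a ROCm build, here is a minimal sketch. It relies on two real PyTorch attributes: `torch.version.hip`, which holds a version string on ROCm builds and `None` otherwise, and `torch.cuda.is_available()`, which ROCm builds reuse to report AMD GPUs. The helper name `describe_backend` and its message strings are illustrative assumptions, not part of PyTorch or ROCm.

```python
def describe_backend(torch_module):
    """Describe which GPU backend a PyTorch build targets.

    Hypothetical helper for illustration; pass in the imported torch module.
    """
    # torch.version.hip is a version string on ROCm builds, None otherwise.
    hip_version = getattr(torch_module.version, "hip", None)
    if hip_version is None:
        return "not a ROCm build"
    # ROCm builds expose AMD GPUs through the torch.cuda namespace.
    if torch_module.cuda.is_available():
        return f"ROCm build (HIP {hip_version}), GPU visible"
    return f"ROCm build (HIP {hip_version}), no GPU visible"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        print(torch.__version__, "-", describe_backend(torch))
```

Passing the module in as an argument, rather than importing it inside the helper, keeps the check easy to try out on a machine without a GPU or without PyTorch installed.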

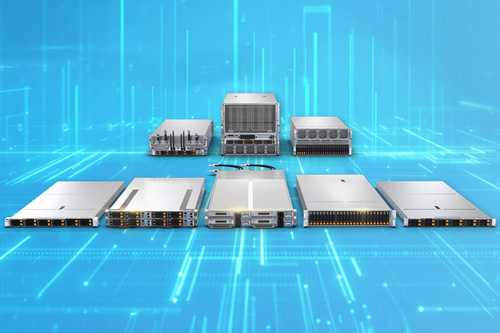

Super for Supermicro

Developers can use ROCm 6.3 to tune workloads and create solutions for Supermicro GPU systems based on AMD Instinct MI300 accelerators.

Supermicro offers three such systems:

- An 8U 8-GPU system for massive-scale AI training and inference

- A liquid-cooled 2U quad-APU system for enterprise HPC and supercomputing

- An air-cooled 4U quad-APU system for converged HPC and scientific computing

Are your customers building AI and HPC systems? Then tell them about the new features offered by AMD ROCm 6.3.

Do More:

- Join the AMD ROCm community

- Explore AMD ROCm

- Read the AMD ROCm 6 product brief

- Get release notes for AMD ROCm 6.3.0 and ROCm 6.3.1

- Meet the AMD Instinct accelerators