Sales of artificial intelligence platform software show no sign of a slowdown. The road to true Generative AI disruption could be bumpy. And PCs with built-in AI capabilities are starting to sell.

Those are some of the latest AI insights from leading market researchers, analysts and pollsters. Here’s your research roundup.

AI Platforms Maintain Momentum

Is the excitement around AI overblown? Not at all, says market watcher IDC.

“The AI platforms market shows no sign of slowing down,” says IDC VP Ritu Jyoti.

IDC now believes that the market for AI platform software will maintain its momentum through at least 2028.

By that year, IDC expects, worldwide revenue for AI platform software will reach $153 billion. If so, that would mark a five-year compound annual growth rate (CAGR) of nearly 41%.

The market really got underway last year. That’s when worldwide AI platform software revenue hit $27.9 billion, an annual increase of 44%, IDC says.
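For readers who want to check the math, here is a minimal Python sketch of the CAGR calculation using the two IDC revenue figures quoted above. It assumes that "last year" is the base year and that the forecast year is five years later. The function and variable names are illustrative only and do not come from IDC.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted from IDC, in billions of U.S. dollars.
base_revenue = 27.9       # AI platform software revenue last year
forecast_revenue = 153.0  # IDC's forecast for 2028
periods = 5               # assumption: five annual periods between the two figures

rate = cagr(base_revenue, forecast_revenue, periods)
print(f"Implied five-year CAGR: {rate:.1%}")
```

Under those assumptions the result lands a little above 40%, which is in line with IDC's "nearly 41%." Small differences can come from rounding in the published revenue figures.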

Since then, lots of progress has been made. Fully half the organizations now deploying GenAI in production have already selected an AI platform. And IDC says most of the rest will do so in the next six months.

All that has AI software suppliers looking pretty smart.

Mixed Signals on GenAI

There’s no question that GenAI is having a huge impact. The question is how difficult it will be for GenAI-using organizations to achieve their desired results.

GenAI use is already widespread. In a global survey conducted earlier this year by management consultants McKinsey & Co., 65% of respondents said they use GenAI on a regular basis.

That was nearly double the percentage from McKinsey’s previous survey, conducted just 10 months earlier.

Also, three quarters of McKinsey’s respondents said they expect GenAI will lead their industries to significant or disruptive changes.

However, the road to GenAI could be bumpy. Separately, researchers at Gartner are predicting that by the end of 2025, at least 30% of all GenAI projects will be abandoned after their proof-of-concept (PoC).

The reason? Gartner points to several factors: poor data quality, inadequate risk controls, unclear business value, and escalating costs.

“Executives are impatient to see returns on GenAI investments,” says Gartner VP Rita Sallam. “Yet organizations are struggling to prove and realize value.”

One big challenge: Many organizations investing in GenAI want productivity enhancements. But as Gartner points out, those gains can be difficult to quantify.

Further, implementing GenAI is far from cheap. Gartner’s research finds that a typical GenAI deployment costs anywhere from $5 million to $20 million.

That wide range of costs is due to several factors. These include the use cases involved, the deployment approaches used, and whether an organization seeks to be a market disruptor.

Clearly, an intelligent approach to GenAI can be a money-saver.

PCs with AI? Yes, Please

Leading PC makers hope to boost their hardware sales by offering new, built-in AI capabilities. It seems to be working.

In the second quarter of this year, 8.8 million AI-capable PCs shipped, accounting for 14% of all PCs shipped globally in the quarter, says market analyst Canalys.

Canalys defines “AI-capable” pretty simply: It’s any desktop or notebook system that includes a chipset or block for one or more dedicated AI workloads.

By operating system, nearly 40% of the AI-capable PCs shipped in Q2 were Windows systems, 60% were Apple macOS systems, and just 1% ran ChromeOS, Canalys says.

For the full year 2024, Canalys expects some 44 million AI-capable PCs to be shipped worldwide. In 2025, the market watcher predicts, these shipments should more than double, rising to 103 million units worldwide. There's nothing artificial about that boost.
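The Canalys numbers also lend themselves to a quick back-of-the-envelope check. The short Python sketch below derives two figures the firm does not state directly in this roundup: the implied size of the total Q2 PC market and the 2024-to-2025 growth multiple. Both are simple estimates computed from the rounded figures quoted above, and the variable names are illustrative.

```python
# Figures quoted from Canalys, in millions of units.
ai_pcs_q2 = 8.8         # AI-capable PCs shipped in Q2
ai_share_q2 = 0.14      # share of all PCs shipped in Q2
forecast_2024 = 44.0    # expected AI-capable PC shipments, full year 2024
forecast_2025 = 103.0   # expected AI-capable PC shipments, full year 2025

# Implied total PC shipments in Q2 (an estimate, since both inputs are rounded).
total_pcs_q2 = ai_pcs_q2 / ai_share_q2

# Growth multiple from 2024 to 2025; anything above 2.0 is "more than double."
growth_multiple = forecast_2025 / forecast_2024

print(f"Implied total Q2 PC shipments: about {total_pcs_q2:.0f} million")
print(f"2025 vs. 2024 AI-capable shipments: {growth_multiple:.2f}x")
```

The growth multiple comes out above 2, which matches the "more than double" description.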

Do more:

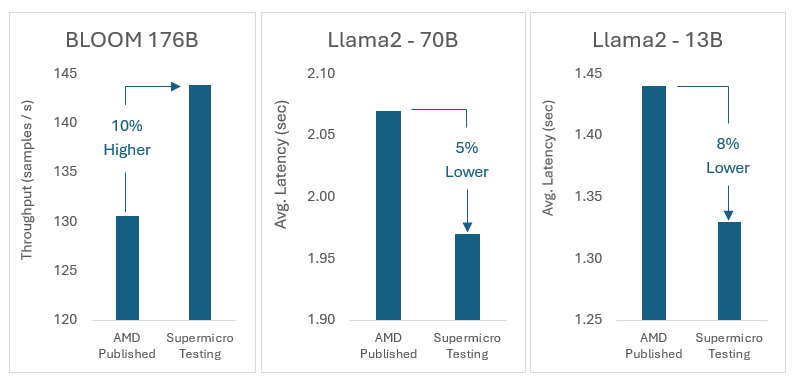

- Explore AMD’s AI solutions

- Look into Supermicro’s AI infrastructure solutions