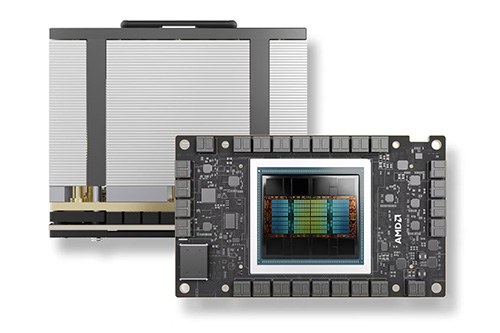

The AMD Instinct MI300A is the world’s first data center accelerated processing unit (APU) for high-performance computing (HPC) and AI. It earns that title by integrating both CPU and GPU cores on a single package.

That makes the AMD Instinct MI300A highly efficient at running both HPC and AI workloads. It also makes the MI300A powerful enough to accelerate the training of the latest AI models.

Introduced about a year ago, the AMD Instinct MI300A accelerator is shipping soon. So are two Supermicro servers—one a liquid-cooled 2U system, the other an air-cooled 4U—each powered by four MI300A units.

Under the Hood

The technology of the AMD Instinct MI300A is impressive. Each MI300A integrates 24 AMD ‘Zen 4’ x86 CPU cores with 228 AMD CDNA 3 high-throughput GPU compute units.

You also get 128GB of unified HBM3 memory. This presents a single shared address space to the CPU and GPU, which are interconnected through the coherent 4th Gen AMD Infinity architecture.

Also, the AMD Instinct MI300A is designed to be used in a multi-unit configuration. This means you can connect up to four of them in a single server.

To make this work, each APU has 1 TB/sec. of bidirectional connectivity through eight 128 GB/sec. AMD Infinity Fabric interfaces. Four of the interfaces are dedicated Infinity Fabric links. The other four can be flexibly assigned to deliver either Infinity Fabric or PCIe Gen 5 connectivity.

In a typical four-APU configuration, six interfaces are dedicated to inter-GPU Infinity Fabric connectivity. That supplies a total of 384 GB/sec. of peer-to-peer connectivity per APU. One interface is assigned to support x16 PCIe Gen 5 connectivity to external I/O devices. In addition, each MI300A includes two x4 interfaces to storage, such as M.2 boot drives, plus two USB Gen 2 or 3 interfaces.
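
The bandwidth figures above can be checked with a little arithmetic. The Python sketch below uses only the numbers quoted in this article, and its variable names are illustrative. Two points are assumptions rather than anything stated here: reading the 384 GB/sec. figure as one direction of each bidirectional link, and splitting the six peer links evenly across the other three APUs.

```python
# Back-of-the-envelope check of the MI300A link figures quoted above.
# All names are illustrative; the numbers come from the article.

LINKS_PER_APU = 8        # Infinity Fabric interfaces per APU
LINK_BIDIR_GB_S = 128    # bidirectional bandwidth per interface, GB/s

total_bidir_gb_s = LINKS_PER_APU * LINK_BIDIR_GB_S
print(f"Aggregate per APU: {total_bidir_gb_s} GB/s (about 1 TB/s)")

# Typical four-APU layout described in the text.
PEER_LINKS = 6           # interfaces used for APU-to-APU traffic
QUOTED_PEER_GB_S = 384   # peer-to-peer figure quoted per APU, GB/s

per_link_gb_s = QUOTED_PEER_GB_S / PEER_LINKS
print(f"Implied peer bandwidth per link: {per_link_gb_s:.0f} GB/s")

# ASSUMPTION: 64 GB/s per link corresponds to one direction of a
# 128 GB/s bidirectional link, which would account for the 384 figure.
assert per_link_gb_s == LINK_BIDIR_GB_S / 2

# ASSUMPTION: the six peer links are split evenly across the other
# three APUs in a four-APU server.
PEERS = 4 - 1
links_per_peer = PEER_LINKS // PEERS
print(f"Links per peer APU: {links_per_peer}")
```

Under those assumptions, each APU would reach every other APU over two links.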

Converged Computing

There’s more. The AMD Instinct MI300A was designed to handle today’s convergence of HPC and AI applications at scale.

To meet the increasing demands of AI applications, the APU is optimized for widely used data types. These include FP64, FP32, FP16, BF16, TF32, FP8 and INT8.
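
One reason the narrower data types matter is memory footprint: the smaller each value, the more of them fit in the APU's 128GB of HBM3. The Python sketch below illustrates that relationship. It covers storage size only, not throughput. The helper name `elements_that_fit` is hypothetical, and both the decimal reading of "128GB" and the 32-bit container for TF32 are assumptions.

```python
# Storage width in bits for each data type listed above.
# ASSUMPTION: TF32 values are held in a 32-bit container.
BITS = {
    "FP64": 64,
    "FP32": 32,
    "TF32": 32,
    "FP16": 16,
    "BF16": 16,
    "FP8": 8,
    "INT8": 8,
}

# ASSUMPTION: "128GB" is treated as 128 decimal gigabytes.
HBM3_BYTES = 128 * 10**9


def elements_that_fit(dtype, capacity_bytes=HBM3_BYTES):
    """Return how many values of `dtype` fit in `capacity_bytes`."""
    bytes_per_value = BITS[dtype] // 8
    return capacity_bytes // bytes_per_value


for name in BITS:
    billions = elements_that_fit(name) / 1e9
    print(f"{name:>4}: {billions:.0f} billion values")
```

Moving from FP64 to FP8 lets the same memory hold eight times as many values, which is why lower-precision formats are popular for large AI models.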

The MI300A also supports native hardware sparsity for efficiently gathering data from sparse matrices. This saves power and compute cycles, and it also lowers memory use.

Another element of the design aims at high efficiency by eliminating time-consuming data copy operations. The MI300A can easily offload tasks between the CPU and GPU. And it’s all supported by AMD’s ROCm 6 open software platform, built for HPC, AI and machine learning workloads.

Finally, virtualized environments are supported on the MI300A through SR-IOV, which shares resources across up to three partitions per APU. SR-IOV, short for single-root I/O virtualization, is an extension of the PCIe spec. It allows a device to separate access to its resources among various PCIe functions. The goal: improved manageability and performance.

Fun fact: The AMD Instinct MI300A is a key design component of the El Capitan supercomputer recently dedicated by Lawrence Livermore National Laboratory. This system can process over two quintillion (2 x 10^18) calculations per second.

Supermicro Servers

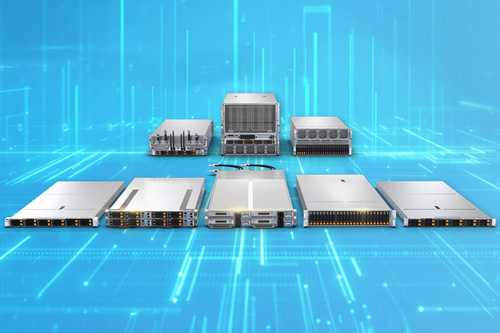

As mentioned above, Supermicro now offers two server systems based on the AMD Instinct MI300A APU. They’re 2U and 4U systems.

These servers both take advantage of AMD’s integration features by combining four MI300A units in a single system. That gives you a total of 912 GPU compute units, 96 CPU cores, and 512GB of HBM3 memory.
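
The per-server totals follow directly from the per-APU figures given earlier. Here is a minimal Python sketch of that multiplication; the `Apu` class and `server_totals` function are illustrative names, not part of any AMD or Supermicro tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Apu:
    """Per-APU resources as quoted in this article."""
    cpu_cores: int = 24            # 'Zen 4' x86 cores
    gpu_compute_units: int = 228   # CDNA 3 compute units
    hbm3_gb: int = 128             # unified HBM3 memory, GB


def server_totals(apu, apus_per_server=4):
    """Multiply each per-APU resource by the number of APUs in a server."""
    return {
        "cpu_cores": apu.cpu_cores * apus_per_server,
        "gpu_compute_units": apu.gpu_compute_units * apus_per_server,
        "hbm3_gb": apu.hbm3_gb * apus_per_server,
    }


totals = server_totals(Apu())
for resource, amount in totals.items():
    print(f"{resource}: {amount}")
```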

Supermicro says these systems can push HPC processing to exascale levels. “Flops” is short for floating-point operations per second, and “exa” indicates a 1 with 18 zeros after it, so an exascale system performs at least a quintillion such operations every second. That’s fast.

Supermicro’s 2U server (model number AS -2145GH-TNMR-LCC) is liquid-cooled and aimed at HPC workloads. Supermicro says direct-to-chip liquid-cooling technology lowers total cost of ownership (TCO), with over 51% data center energy cost savings. The company also cites a 70% reduction in fan power usage, compared with air-cooled solutions.

If you’re looking for big HPC horsepower, Supermicro’s got your back with this 2U system. The company’s rack-scale integration is optimized with dual AIOM (advanced I/O modules) and 400G networking. This means you can create a high-density supercomputing cluster with as many as 21 of Supermicro’s 2U systems in a 48U rack. With each system combining four MI300A units, that would give you a total of 84 APUs.
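
The rack-density numbers can be worked through the same way. The short Python sketch below uses the figures quoted in this article to show how much of a 48U rack the 21 systems occupy and what the rack holds in aggregate. The constant names are illustrative, and the aggregate figures are plain multiplication, not vendor-published specifications.

```python
# Rack-level arithmetic for the liquid-cooled 2U system described above.
RACK_HEIGHT_U = 48
SYSTEM_HEIGHT_U = 2
SYSTEMS_PER_RACK = 21
APUS_PER_SYSTEM = 4

# Per-APU figures quoted earlier in the article.
CPU_CORES_PER_APU = 24
GPU_CUS_PER_APU = 228
HBM3_GB_PER_APU = 128

used_u = SYSTEMS_PER_RACK * SYSTEM_HEIGHT_U
free_u = RACK_HEIGHT_U - used_u
apus_per_rack = SYSTEMS_PER_RACK * APUS_PER_SYSTEM

print(f"Rack units used by servers: {used_u}U, remaining: {free_u}U")
print(f"APUs per rack: {apus_per_rack}")
print(f"CPU cores per rack: {apus_per_rack * CPU_CORES_PER_APU}")
print(f"GPU compute units per rack: {apus_per_rack * GPU_CUS_PER_APU}")
print(f"HBM3 per rack: {apus_per_rack * HBM3_GB_PER_APU} GB")
```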

The other Supermicro server (model number AS -4145GH-TNMR) is an air-cooled 4U system, also equipped with four AMD Instinct MI300A accelerators, and it’s intended for converged HPC-AI workloads. The system’s mechanical airflow design keeps thermal throttling at bay; if that’s not enough, the system also has 10 heavy-duty 80mm fans.

Do More:

- Learn more: AMD Instinct MI300A

- Download a data sheet: AMD Instinct MI300

- CPU and GPU for AI? Learn why from the recent Tech Explainer

- Read an AMD ROCm blog post: MI300A — Exploring the APU advantage