SVA System Vertrieb Alexander GmbH, better known as SVA, is one of Germany’s leading IT system integrators. Headquartered in Wiesbaden, the company employs more than 2,700 people in 27 branch offices. SVA’s customers include organizations in automotive, financial services and healthcare.

To learn more about how SVA works jointly with Supermicro and AMD on advanced technologies, PIC managing editor Peter Krass spoke recently with Bernhard Homoelle, head of SVA’s high performance computing (HPC) competence center (pictured above). Their interview has been lightly edited.

For readers outside of Germany, please tell us about SVA.

First of all, SVA is an owner-operated system integrator. We offer high-quality products, we sell infrastructure, we support certain types of implementations, and we offer operational support to help our customers achieve optimum solutions.

We work with partners to figure out what might be the best solution for our customers, rather than just picking one vendor and trying to convince the customer they should use them. Instead, we figure out what is really needed. Then we go in the direction where the customer can really have their requirements met. The result is a good relationship with the customer, even after a particular deal has been closed.

Does SVA focus on specific industries?

While we do support almost all the big industries—automotive, transportation, public sector, healthcare and more—we are not restricted to any specific vertical. Our main business is helping customers solve their daily IT problems, deal with the complexity of new IT systems, and implement new things like AI and even quantum computing. So we’re open to new solutions. We also offer training with some of our partners.

Germany has a robust auto industry. How do you work with these clients?

In general, they need huge HPC clusters and machine learning. For example, autonomous driving demands not only more computing power, but also more storage. We’re talking about petabytes of data, rather than terabytes. And this huge amount of data needs to be stored somewhere and finally processed. That puts pressure on the infrastructure: not just on storage, but also on the network and on the compute side. On their way into the cloud, some of these customers are saying, “Okay, offer me HPC as a Service.”

How do you work with AMD and Supermicro?

It’s a really good relationship. We like working with them because Supermicro has all these various types of servers for individual needs. Customers are different, and therefore they have their own requirements. Figuring out what might be the best server for them is difficult if you have limited types of servers available. But with Supermicro, you can get what you have in mind. You don’t have to look for special implementations because they have these already at hand.

We’re also partnering with AMD, and we have access to their benchmark labs, so we can get very helpful information. We start with discussions with the customer to figure out their needs. Typically, we pick up an application from the customer and then use it as a kind of benchmark. Next, we put it on a cluster with different memory, different CPUs, and look for the best solution in terms of performance for their particular application. Based on the findings, we can recommend a specific CPU, number of cores, memory type and size, and more.
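As a rough illustration of the selection step described above, the following Python sketch picks the fastest node configuration from a set of measured runtimes for one customer application. It is only a sketch under stated assumptions: the `NodeConfig` fields, the configuration names and the idea of ranking purely by runtime are hypothetical and are not taken from SVA's or AMD's actual benchmarking process.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    """Hypothetical description of one benchmarked server configuration."""
    cpu: str          # placeholder label, not a real product name
    cores: int
    memory_gb: int


def best_config(runtimes: dict[NodeConfig, float]) -> NodeConfig:
    """Return the configuration with the shortest measured runtime in seconds."""
    if not runtimes:
        raise ValueError("no benchmark results supplied")
    return min(runtimes, key=runtimes.get)


def rank_configs(runtimes: dict[NodeConfig, float]) -> list[NodeConfig]:
    """Return all configurations ordered from fastest to slowest."""
    return sorted(runtimes, key=runtimes.get)
```

In practice the runtimes would come from running the customer's own application on each candidate cluster. A real recommendation would also weigh price and power draw, not runtime alone.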

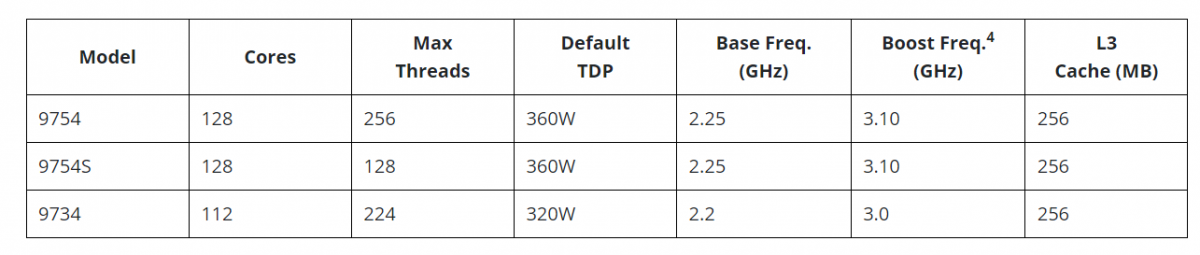

With HPC applications, core memory bandwidth is almost as important as the number of cores. AMD’s new Genoa-X processors should help to overcome some of these limitations. And looking ahead, I’m keen to see what AMD will offer with the Instinct MI300.

Are there special customer challenges you’re solving with Supermicro and AMD solutions?

With HPC workloads, our academic customers say, “This is the amount of money available, so how many servers can you really give us for this budget?” Supermicro and AMD really help here with reasonable prices. They’re a good choice for price/performance.

With AI and machine learning, the real issue is software tools. It really depends on what kinds of models you can use and how easy it is to use the hardware with those models.

This discussion is not easy, because for many of our customers today, AI means Nvidia. But I really recommend alternatives, and AMD is bringing some alternatives that are great. They offer a fast time to solution, but they also need to be easy to switch to.

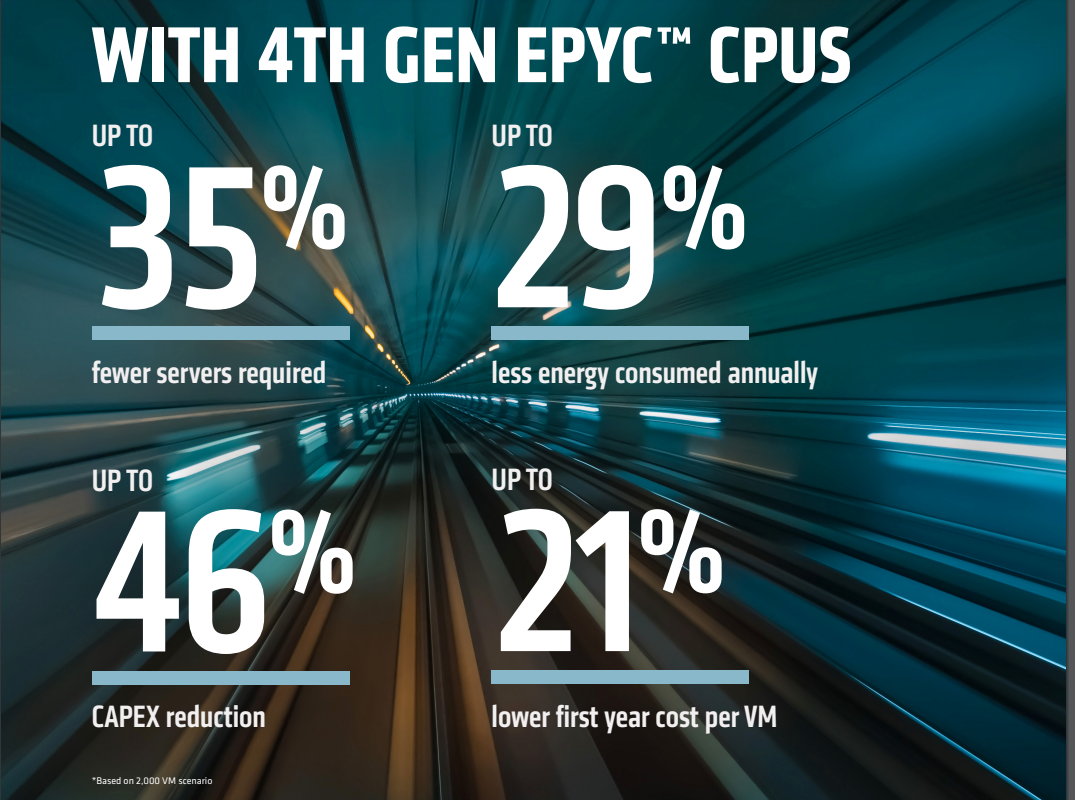

How about "green" computing? Is this an important issue for your customers now?

Yes, more and more we’re seeing customers ask for this green computing approach. Typically, a customer has a thermal budget and a power-price budget. They may say, “In five years, the expenses paid for power should not exceed a certain limit.”
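To make the power-price budget concrete, here is a minimal Python sketch of the arithmetic behind such a five-year limit. All parameters (node count, watts per node, electricity price, and the cooling overhead factor `pue`) are hypothetical inputs chosen for illustration. The sketch also assumes the nodes draw constant power around the clock, which real clusters do not.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap days for simplicity


def power_cost(nodes: int, watts_per_node: float, price_per_kwh: float,
               years: float = 5.0, pue: float = 1.0) -> float:
    """Estimated electricity cost of running `nodes` servers for `years`.

    `pue` is an assumed overhead multiplier for cooling and facility power
    (1.0 means no overhead).
    """
    kw_total = nodes * watts_per_node / 1000.0 * pue
    return kw_total * HOURS_PER_YEAR * years * price_per_kwh


def max_nodes_within_budget(budget: float, watts_per_node: float,
                            price_per_kwh: float, years: float = 5.0,
                            pue: float = 1.0) -> int:
    """Largest number of nodes whose power cost stays within `budget`."""
    per_node = power_cost(1, watts_per_node, price_per_kwh, years, pue)
    return int(budget // per_node)
```

Calling `max_nodes_within_budget` with a customer's limit gives an upper bound on cluster size from the power side alone. The purchase budget and the thermal budget would constrain it further.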

In Europe, we also have a supply-chain discussion. Vendors must increasingly provide proof that they’re addressing issues in their supply chain, including child labor and working conditions. This is almost mandatory, especially in government calls. If you’re unable to answer these questions, you’re out of the bid.

With green computing, we see that the power needed for CPUs and GPUs is going up and up. Five years ago, the maximum a CPU could burn was 200W, but now even 400W might not be enough. Some GPUs are as high as 700W, and there are super-chips beyond even that.

All this makes it difficult to use air-cooled systems. Customers can use air conditioning to a certain extent, but there’s only so much air you can press through the rack. Then you need either on-chip water cooling or some kind of immersion cooling. This can help in two dimensions: saving energy and increasing density, because you can put the components closer together and you don’t need the big heat sink anymore.

One issue now is that each vendor offers a different cooling infrastructure. Some of our customers run multi-vendor data centers, so this could create a compatibility issue. That’s one reason we’re looking into immersion cooling. We think we could do some of our first customer implementations in 2024.

Looking ahead, what do you see as a big challenge?

One area is that we want to help customers get easier access to their HPC clusters. That’s done on the software side.

In contrast to classic HPC users, machine learning and AI engineers are not that interested in Linux stuff, compiler options or any other infrastructure details. Instead, they’d like to work on their frameworks. The challenge is getting them to their work as easily as possible—so that they can just log in, and they’re in their development environment. That way, they won’t have to care about what sort of operating system is underneath or what kind of scheduler, etc., is running.