There are times when a standard-sized server just won’t do. Maybe your customer’s branch office or retail store has space constraints. Maybe they have concerns over portability. Or maybe their sustainability goals call for a system with low power draw and efficient cooling.

For these and other related situations, short-depth servers can fit the bill. These relatively diminutive boxes are designed for use in less-than-ideal physical spaces that nevertheless demand high-performance IT infrastructure.

What kinds of organizations could benefit from short-depth servers? Consider your local retail store. It’s likely been laid out using a calculus that prioritizes profit per square inch. This means the store’s best spots are dedicated to attracting buyers and generating revenue.

While that’s smart in terms of retail finance, it may not leave much room for vital infrastructure. That includes the servers that power the store’s point of sale (POS), security, advertising and data-collection systems.

This is a case where short-depth servers can help. These systems provide high levels of compute, storage and networking—without needing tall data center racks, elaborate cooling systems or other supporting infrastructure.

Other good candidates for short-depth servers include remote branch offices, telco edge installations and industrial environments. In other words, they suit any location that needs enterprise-level servers but is short on space.

Small but Mighty

What’s more, today’s short-depth servers can handle some serious workloads.

Consider, for instance, the Supermicro WIO A+ Server (AS -1115SV-WTNRT), powered by AMD EPYC 8004 series processors. This short-depth server is engineered to tackle a variety of workloads, including virtualization, firewall applications, database, storage, edge and cloud computing.

The WIO A+ comes in a 1U form factor with a depth of just 23.5 inches. Compare that with one of Supermicro’s big 8U multi-GPU servers, which is more than 33 inches deep, and the short-depth server is short indeed.

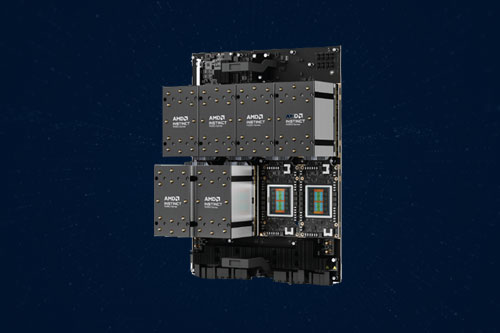

Yet despite its diminutive size, this Supermicro server is packed with a ton of power—and room to grow. A single AMD EPYC processor sits at the center of the action, aided by either one double-width or two single-width GPUs.

This server also has room for up to 768GB of ECC DDR5 memory. And it can accommodate up to 10 hot-swap drives for NVMe, SAS or SATA storage.

As if that weren’t enough, Supermicro also includes room in this server’s chassis for two PCIe 5.0 x16 full-height, full-length (FHFL) expansion cards. There’s also space for a single PCIe 5.0 x16 low-profile (LP) card.

More Power for Smaller Space

Fitting enough tech into a short-depth server can be a challenge. To do this, Supermicro’s designers had a few tricks up their sleeves.

For one, they used a custom motherboard instead of the more common ATX or EEB types. This frees up space in the smaller chassis. It also lets the designers employ a high-density component layout: the processor, GPUs, drives and other elements are placed closer together than they could be in a standard server.

Supermicro’s designers also deployed low-profile heat sinks. These use heat pipes to carry heat toward the fans. To save space, the fans are smaller than usual, but they make up the difference by spinning faster. Sure, faster fans can create more noise. But that’s a worthy trade-off to avoid system failure due to overheating.

Are there downsides to the smaller form factor? There can be. For one, constrained airflow could force a system to throttle both processor and GPU performance to keep temperatures in check. That could be a concern when running highly resource-intensive VM workloads.

For another, the smaller power supply units (PSUs) used in many short-depth servers may necessitate a less powerful configuration than a user might prefer. For example, Supermicro’s short-depth server includes two 860-watt power supplies. That’s far less available power than the company’s multi-GPU powerhouse, which comes with six 5,250-watt PSUs. Of course, from another perspective, lower power requirements can be a benefit, especially at remote edge locations.

Short-depth servers represent a useful trade-off. While they give up some power and expandability, their smaller footprint can help IT pros make the most of tight spaces.

Do More:

- Check out Supermicro’s short-depth server powered by AMD processors

- Download the data sheet: Supermicro WIO A+ server AS -1115SV-WTNRT