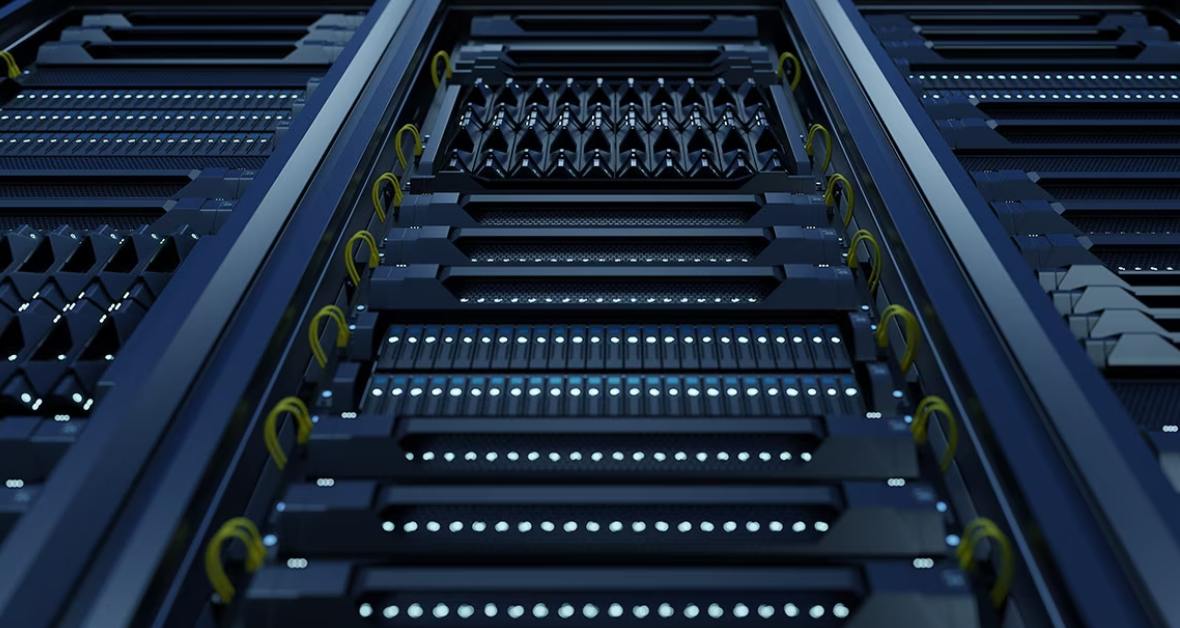

This week AMD deepened its commitment to the cloud and enterprise markets. The company announced several new products and partnerships at its Data Center and AI Technology Premiere event, which was held in San Francisco and simultaneously broadcast online.

“We’re focused on pushing the envelope in high-performance and adaptive computing,” AMD CEO Lisa Su told the audience, “creating solutions to the world’s most important challenges.”

Here’s what’s new:

Bergamo: That’s the former codename for the new 4th gen AMD EPYC 97X4 processors. AMD’s first processors designed specifically for cloud-native workloads, they pack up to 128 cores per socket using AMD’s new Zen 4c design to deliver high performance per watt. Each socket contains 8 chiplets, each with up to 16 Zen 4c cores; that’s twice as many cores per chiplet as AMD’s earlier Genoa processors (yet the two lines are compatible). The entire lineup is available now.

Genoa-X: Another codename, this one for AMD’s new generation of AMD 3D V-Cache technology. This new product, designed specifically for technical computing such as engineering simulation, now supports over 1GB of L3 cache on a 96-core CPU. It’s paired with the new 4th gen AMD EPYC processor, including the high-performing Zen 4 core, to deliver high performance per core.

“A larger cache feeds the CPU faster with complex data sets, and enables a new dimension of processor and workload optimization,” said Dan McNamara, an AMD senior VP and GM of its server business.

In all, there are 4 new Genoa-X SKUs, ranging from 16 to 96 cores, and all socket-compatible with AMD’s Genoa processors.

Genoa: Technically not new, as this family of data-center CPUs was introduced last November. What is new is AMD’s focus for the processors on AI, data-center consolidation and energy efficiency.

AMD Instinct: Though AMD had already introduced its Instinct MI300 Series accelerator family, the company is now revealing more details.

This includes the introduction of the AMD Instinct MI300X, an advanced accelerator for generative AI based on AMD’s CDNA 3 accelerator architecture. It will support up to 192GB of HBM3 memory to provide the compute and memory efficiency needed for large language model (LLM) training and inference for generative AI workloads.

AMD also introduced the AMD Instinct Platform, which brings together eight MI300X accelerators in an industry-standard design for AI inference and training. The MI300X is sampling to key customers starting in Q3.

Finally, AMD announced that the AMD Instinct MI300A, an APU accelerator for HPC and AI workloads, is now sampling to customers.

Partner news: Mark your calendar for June 20. That’s when Supermicro plans to explore key features and use cases for its Supermicro H13 systems based on AMD EPYC 9004 series processors. These Supermicro systems will feature AMD’s new Zen 4c architecture and 3D V-Cache tech.

This week Supermicro announced that its entire line of H13 AMD-based systems is now available with support for the 4th gen AMD EPYC processors with Zen 4c architecture and V-Cache technology.

That includes Supermicro’s new 1U and 2U Hyper-U servers designed for cloud-native workloads. Both are equipped with a single AMD EPYC processor with up to 128 cores.

Do more:

- Learn more about 4th Gen AMD EPYC processors

- Meet the new Supermicro Hyper-U servers