The market for high-performance computing (HPC) is changing, which means the system integrators that serve HPC customers need to change too.

To learn more, PIC managing editor Peter Krass spoke recently with Oliver Tennert, NEC Germany’s director of HPC marketing and post-sales. NEC Germany works with hardware vendors including AMD, for processors, and Supermicro, for servers. This interview has been lightly edited for clarity.

First, can you tell me about NEC Germany and its relationship with parent company NEC Corp.?

I work for NEC Germany, which is a subsidiary of NEC Europe. Our parent company, NEC Corp., is a Japanese company with a focus on telecommunications, which is still a major part of our business. Today NEC has about 100,000 employees around the world.

HPC as a business within NEC is done primarily by NEC Germany and our counterparts at NEC Corp. in Japan. The Japanese operation covers HPC in Asia, and we cover EMEA, mainly Europe.

What kinds of HPC workloads and applications do your customers run?

It’s probably 60:40 — that is, about 60% of our customers are in academia, including universities, research facilities, and even DWD, Germany’s weather-forecasting service. The remaining 40% are industrial, including automotive and engineering companies.

The typical HPC use cases of our customers come in two categories. The most important HPC category of course is simulation. That can mean simulating physical processes. For example, what does a car crash look like under certain parameters? These simulations are done in great detail.

Our other important HPC category is data analytics. For example, that could mean genomic analysis.

How do you work with AMD and Supermicro?

To understand this, you first have to understand how NEC’s HPC business works. For us, there are two aspects to the business.

One, we’ve got our own vector technology. Our NEC vector engine is a PCIe card designed and produced in Japan. The latest incarnation of our vector supercomputer is the NEC SX-Aurora TSUBASA. It was designed to run applications that are vectorizable and that benefit from high bandwidth to main memory. One of our big customers in this area is the German weather service, DWD.

The other part of the business is what we call “pizza boxes,” the x86 architecture. For this, we need industry-standard servers, including processors from AMD and servers from Supermicro.

For that second part of the business, what is NEC’s role?

The answer has to do with how the HPC business works operationally. If a customer intends to purchase a new HPC cluster, typically they need expert advice on designing an optimized HPC environment. What they do know is the application they run. And what they want to know is, ‘How do we get the best, most optimized system for this application?’

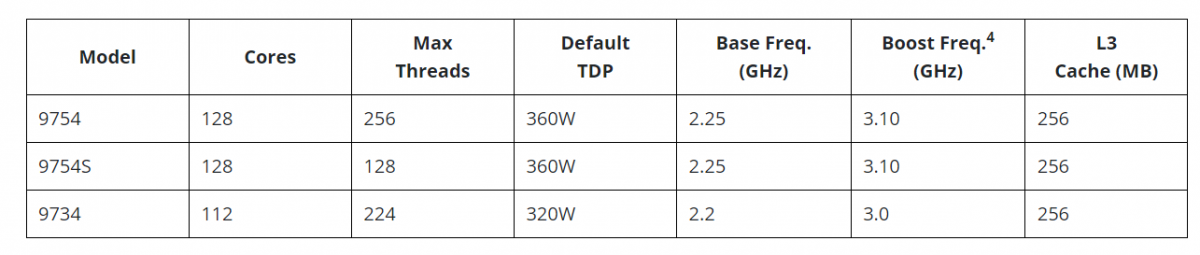

This implies doing a lot of configuration. Essentially, we optimize the design based on many different components. Even if we know that an AMD processor is the best for a particular task, still, there are dozens of combinations of processor SKUs and server model types which offer different price/performance ratios. The same applies to certain data-storage solutions. For HPC, storage is more than just picking an SSD. What’s needed is a completely different kind of technology.

Configuring and setting up such a complex solution takes a lot of expertise. We’re being asked to run benchmarks. That means the customer says, ‘Here’s my application, please run it on some specific configurations, and tell me which one offers the best price/performance ratio.’ This takes a lot of time and resources. For example, you need the systems on hand to just try it out. And the complete tender process—from pre-sales discussions to actual ordering and delivery—can take anywhere from weeks to months.
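As an illustration of the benchmark comparison described above, here is a minimal Python sketch. It is not NEC's actual process. It assumes each candidate configuration has a quoted price and a measured runtime for the customer's application, and it treats price multiplied by runtime as the price/performance figure, with lower being better. The configuration names, prices, and runtimes are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    config_name: str   # hypothetical label for a CPU SKU / server model combination
    price_eur: float   # quoted system price (made-up figure)
    runtime_s: float   # wall-clock time of the customer's application (made-up figure)


def price_performance(result: BenchmarkResult) -> float:
    """Price divided by performance, where performance = 1 / runtime.

    This equals price * runtime, so a lower value is better.
    """
    return result.price_eur * result.runtime_s


def rank_configs(results: list[BenchmarkResult]) -> list[BenchmarkResult]:
    """Return the configurations ordered from best to worst price/performance."""
    return sorted(results, key=price_performance)


# Illustrative data only; real tenders compare far more combinations.
results = [
    BenchmarkResult("config-a", 100_000.0, 120.0),
    BenchmarkResult("config-b", 130_000.0, 80.0),
    BenchmarkResult("config-c", 90_000.0, 150.0),
]

best = rank_configs(results)[0]
print(f"Best price/performance: {best.config_name}")
```

In this made-up data set the most expensive configuration wins because its shorter runtime more than offsets its price. A real evaluation would likely also weigh factors such as power consumption.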

And this is just to bid, right? After all this work, you still might not get the order?

Yes, that can happen. There are lots of factors that influence your chances. In general, if you have a good working relationship with a private customer, it’s easier. They have more discretion than academic or public customers. For public bids, everything must be more transparent, because it’s more strictly regulated. Normally, that means you have more work, because you have to test more setups. Your competition will be doing the same.

When working with the second group, the private industry customers, do customers specify parts from specific vendors, such as AMD and Supermicro?

It depends on the factors that will influence the customer’s final selection. Price and performance, that’s one thing. Power consumption is another. Then, sometimes, it’s the vendors. Also, certain projects are more attractive to certain vendors because of their market visibility; these are so-called lighthouse projects. That can have an influence on the conditions we get from vendors. Vendors also recognize the amount of effort we have put into winning the customer in the first place. So there are all sorts of external factors that can influence the final system design.

Also, today, the majority of HPC solutions are similar from an architectural point of view. So competing vendors differentiate themselves by how well they optimize a design built from standard components, rather than by offering a competing architecture. As a result, the soft skills, such as the ability to implement HPC solutions in an efficient and professional way, also have a large influence on the final order.

How about power consumption and cooling? Are these important considerations for your HPC customers?

It’s become absolutely vital. As a rule of thumb, we can say that the larger an HPC project is going to be, the more likely that it is going to be cooled by liquid.

In the past, you had a server room that you cooled with air conditioning. But those times are nearly gone. Today, when you think of a larger HPC installation—say, 1,000 or 2,000 nodes—you’re talking about a megawatt of power being consumed, or even more. And that also needs to be cooled.

The challenge in cooling a large environment is to get the heat away from the server and out of the room to somewhere else, whether outside or to a larger cooling system. This cannot be done by traditional cooling with air. Air is too inefficient for transporting heat. Water is much better. It’s a more efficient means for moving heat from Point A to Point B.
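To put a rough number on the air-versus-water comparison, the following Python sketch compares how much heat a cubic meter of each fluid carries per degree of temperature rise. The property values are rounded textbook figures for room temperature and are an assumption of this example; they do not come from the interview.

```python
# Approximate fluid properties near room temperature (rounded textbook values).
WATER_DENSITY = 998.0         # kg/m^3
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K)
AIR_DENSITY = 1.2             # kg/m^3
AIR_SPECIFIC_HEAT = 1005.0    # J/(kg*K)


def volumetric_heat_capacity(density: float, specific_heat: float) -> float:
    """Heat in joules absorbed by one cubic meter of fluid per kelvin of warming."""
    return density * specific_heat


water = volumetric_heat_capacity(WATER_DENSITY, WATER_SPECIFIC_HEAT)
air = volumetric_heat_capacity(AIR_DENSITY, AIR_SPECIFIC_HEAT)
ratio = water / air

print(f"Water: {water:,.0f} J/(m^3*K)")
print(f"Air:   {air:,.0f} J/(m^3*K)")
print(f"Water carries about {ratio:,.0f} times more heat per unit volume")
```

With these values the ratio comes out at roughly 3,500. That is why moving the same amount of heat with air requires vastly larger volumes of fluid than moving it with water.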

How are you cooling HPC systems with liquid?

There are a few ways to do this. There’s cold-water cooling, mainly indirect. You bring in water with what’s known as an “inlet temperature” of about 10 C and it cools down the air inside the server racks, with the heat getting carried away with the water now at about 15 or 20 C. The issue is, first you need energy just to cool the water down to 10 C. Also, there’s not much you can do with water at 15 or 20 C. It’s too warm for cooling anything else, but too cool for heating a room.

That’s why the new approach is to use hot-water cooling, mainly direct. It sounds like a paradox. But what might seem hot to a human being is in fact pretty cool for a CPU. For a CPU, an ambient temperature of 50 or 60 C is fine; it would be absolutely not fine for a human being. So if you have an inlet temperature for water of, say, 40 or 45 C, that will cool the CPU, which runs at an internal temperature of 80 or 90 C. The outbound temperature of the water is then maybe 50 C. Then it becomes interesting. At that temperature, you can heat a building. You can reuse the heat, rather than just throwing it away. So this kind of infrastructure is becoming more important and more interesting.
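The temperatures mentioned above translate into a required water flow through the relation heat = mass flow × specific heat × temperature rise. The Python sketch below works this out for a 1 MW heat load with a 50 C outlet and inlets of 40 C and 45 C. It assumes, for simplicity, that all of the heat ends up in the water and that water's properties stay constant; real installations capture only part of the heat in the liquid loop, and the function name here is purely illustrative.

```python
WATER_DENSITY = 998.0         # kg/m^3, approximate; varies slightly with temperature
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate


def water_flow_l_per_s(heat_w: float, inlet_c: float, outlet_c: float) -> float:
    """Volume flow of water (liters per second) needed to carry away heat_w watts.

    Uses heat = mass_flow * specific_heat * (outlet - inlet).
    """
    delta_t = outlet_c - inlet_c
    if delta_t <= 0:
        raise ValueError("outlet temperature must be higher than inlet temperature")
    mass_flow_kg_s = heat_w / (WATER_SPECIFIC_HEAT * delta_t)
    return mass_flow_kg_s / WATER_DENSITY * 1000.0  # m^3/s converted to L/s


HEAT_LOAD_W = 1_000_000.0  # the "megawatt" scale mentioned in the interview

for inlet_c in (40.0, 45.0):
    flow = water_flow_l_per_s(HEAT_LOAD_W, inlet_c, 50.0)
    print(f"Inlet {inlet_c:.0f} C, outlet 50 C: {flow:.1f} L/s")
```

Under these assumptions a 10-degree rise needs roughly 24 liters per second, and halving the temperature rise doubles the required flow. This shows how the choice of inlet temperature feeds directly into pump and piping sizing.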

Looking ahead, what are some of your top projects for the future?

Public customers such as research universities have to replace their HPC systems every three to five years. That’s the normal cycle. In that time the hardware becomes obsolete, especially as the vendors optimize their power consumption to performance ratio more and more. So it’s a steady flow of new projects. For our industrial customers, the same applies, though the procurement cycle may vary.

We’re also starting to see the use of computational HPC capacity from the cloud. Normally, when people think of cloud, they think of public clouds from Amazon, Microsoft, etc. But for HPC, there are interim approaches as well. A decade ago, there was the idea of a dedicated public cloud. Essentially, this meant a dedicated capacity that was for the customer’s exclusive use, but was owned by someone other than the customer. Now, between the dedicated cloud and public cloud, there are all these shades of grey. In the past two years, we’ve implemented several larger installations of this “grey-shaded” cloud approach. So more and more, we’re entering the service-oriented market.

There is a larger trend away from customers wanting to own a system, and toward customers just wanting to utilize capacity. Vendors with expertise in HPC have to change as well, which means changing the business and the way they work with customers. It boils down to: Who owns the hardware? And what does the customer buy, hardware or just services? That doesn’t make you a public-cloud provider. It just means you take over responsibility for this particular customer environment. You have a different business model, contract type, and set of responsibilities.