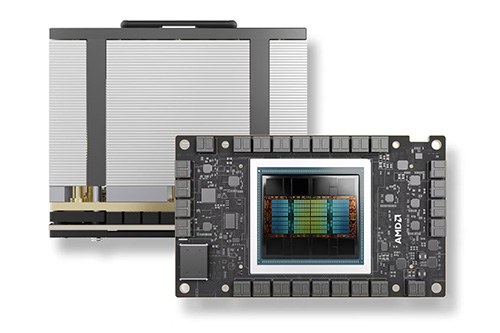

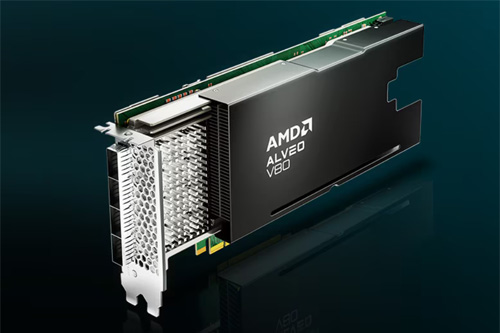

New benchmarks using the AMD Instinct MI300X Accelerator show impressive performance that surpasses the competition.

This is great news for customers operating demanding AI workloads, especially those underpinned by large language models (LLMs) that require super-low latency.

Initial platform tests using MLPerf Inference v4.1 measured AMD’s flagship accelerator on the Llama 2 70B benchmark. This test is a good indicator of performance in real-world applications, including natural language processing (NLP) and large-scale inferencing.

MLPerf is the industry’s leading benchmarking suite for measuring the performance of machine learning and AI workloads from domains that include vision, speech and NLP. It offers a set of open-source AI benchmarks, including rigorous tests focused on Generative AI and LLMs.

Gaining high marks from the MLPerf Inference benchmarking suite represents a significant milestone for AMD. It positions the AMD Instinct MI300X accelerator as a go-to solution for enterprise-level AI workloads.

Superior Instincts

The results of the Llama 2 70B test are particularly significant. That’s because the benchmark allows an apples-to-apples comparison of competing solutions.

In this benchmark, the AMD Instinct MI300X was compared with NVIDIA’s H100 Tensor Core GPU. The test concluded that AMD’s full-stack inference platform outperformed the H100 at LLM inference, a workload that requires both robust parallel computing and a well-optimized software stack.

The testing also showed that because the AMD Instinct MI300X offers the largest GPU memory available, 192GB of HBM3, it was able to fit the entire Llama 2 70B model into the memory of a single accelerator. Because the model did not have to be split across multiple GPUs, the communication overhead that comes with model splitting was avoided. This, in turn, maximized inference throughput, producing superior results.
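A rough back-of-the-envelope check helps show why memory capacity matters here. The sketch below is an illustration, not part of the benchmark report. It assumes model weights are stored at 2 bytes per parameter (FP16) or 1 byte per parameter (FP8), and it ignores the KV cache and activations, which add to the real footprint. The function names are hypothetical, and the 80GB comparison figure is the memory capacity of an H100 SXM card, which the article itself does not state.

```python
import math

GB = 1e9  # decimal gigabytes, matching how GPU memory is usually marketed


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in GB."""
    return num_params * bytes_per_param / GB


def min_gpus_for_weights(footprint_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(footprint_gb / gpu_memory_gb)


LLAMA2_70B_PARAMS = 70e9   # 70 billion parameters
MI300X_MEMORY_GB = 192     # from the article
H100_MEMORY_GB = 80        # assumption: H100 SXM capacity, not from the article

fp16_gb = weight_footprint_gb(LLAMA2_70B_PARAMS, 2)  # 2 bytes per parameter
fp8_gb = weight_footprint_gb(LLAMA2_70B_PARAMS, 1)   # 1 byte per parameter

print(f"FP16 weights: {fp16_gb:.0f} GB, FP8 weights: {fp8_gb:.0f} GB")
print("GPUs needed for FP16 weights on 192GB cards:",
      min_gpus_for_weights(fp16_gb, MI300X_MEMORY_GB))
print("GPUs needed for FP16 weights on 80GB cards:",
      min_gpus_for_weights(fp16_gb, H100_MEMORY_GB))
```

Under these assumptions, the FP16 weights alone come to about 140GB, which fits on one 192GB accelerator but would have to be split across at least two 80GB cards.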

Software also played a big part in the success of the AMD Instinct series. The AMD ROCm software platform accompanies the AMD Instinct MI300X. This open software stack includes programming models, tools, compilers, libraries and runtimes for AI solution development on the AMD Instinct MI300 accelerator series and other AMD GPUs.

The testing showed that, with the ROCm software stack, scaling from a single AMD Instinct MI300X to a complement of eight AMD Instinct accelerators was nearly linear. In other words, the system’s performance improved almost in proportion to the number of GPUs added.
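One common way to put a number on "nearly linear" is scaling efficiency: the measured multi-GPU throughput divided by the throughput that perfect scaling would give. The sketch below assumes that definition. The function name is hypothetical, and the throughput figures are placeholders chosen for illustration, not measured MLPerf results.

```python
def scaling_efficiency(throughput_1gpu: float,
                       throughput_ngpu: float,
                       n_gpus: int) -> float:
    """Ratio of measured N-GPU throughput to ideal (perfectly linear) throughput.

    A value of 1.0 means perfectly linear scaling; values just below 1.0
    are what "nearly linear" usually refers to.
    """
    if n_gpus <= 0 or throughput_1gpu <= 0:
        raise ValueError("n_gpus and throughput_1gpu must be positive")
    ideal = n_gpus * throughput_1gpu
    return throughput_ngpu / ideal


# Placeholder numbers for illustration only (tokens per second).
single_gpu_tps = 1000.0
eight_gpu_tps = 7800.0

efficiency = scaling_efficiency(single_gpu_tps, eight_gpu_tps, 8)
print(f"Scaling efficiency on 8 GPUs: {efficiency:.1%}")
```

With these placeholder inputs, eight GPUs deliver 7.8 times the single-GPU throughput, an efficiency of 97.5 percent.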

That test demonstrated the AMD Instinct MI300X’s ability to handle the largest MLPerf inference models to date, containing over 70 billion parameters.

Thinking Inside the Box

Benchmarking the AMD Instinct MI300X required AMD to create a complete hardware platform capable of addressing strenuous AI workloads. For this task, AMD engineers chose as their testbed the Supermicro AS -8125GS-TNMR2, a massive 8U complete system.

Supermicro’s GPU A+ Client Systems are designed for both versatility and redundancy. Designers can outfit the system with an impressive array of hardware, starting with two AMD EPYC 9004-series processors and up to 6TB of ECC DDR5 main memory.

Because AI workloads consume massive amounts of storage, Supermicro has also outfitted this 8U server with 12 front hot-swap 2.5-inch NVMe drive bays. There’s also the option to add four more drives via an additional storage controller.

The Supermicro AS -8125GS-TNMR2 also includes room for two hot-swap 2.5-inch SATA bays and two M.2 drives, each with a capacity of up to 3.84TB.

Power for all those components is delivered courtesy of six 3,000-watt redundant titanium-level power supplies.

Coming Soon: Even More AI Power

AMD engineers continually push the limits of silicon and human ingenuity to expand the capabilities of their hardware. So it should come as little surprise that new iterations of the AMD Instinct series are expected to be released in the coming months. This past May, AMD officials said they plan to introduce AMD Instinct MI325, MI350 and MI400 accelerators.

Forthcoming Instinct accelerators, AMD says, will deliver advances including additional memory, support for lower-precision data types, and increased compute power.

New features are also coming to the AMD ROCm software stack. Those changes should include kernel improvements and advanced quantization support.

Are your customers looking for a high-powered, low-latency system to run their most demanding HPC and AI workloads? Tell them about these benchmarks and the AMD Instinct MI300X accelerators.

Do More:

- Check out the AMD Engineering Insight: Unveiling MLPerf results on AMD Instinct MI300X accelerators

- Dig into the AMD Instinct MI300X accelerators

- Check the specs on Supermicro’s 8U server powered by AMD Instinct MI300X accelerators

- Read a product brief: Supermicro and AMD deliver rack scale AI and HPC solutions with the new AMD Instinct MI300 series accelerators