Healthcare providers face some tough challenges. Advanced technology can help.

As a recent report from the consulting firm McKinsey & Co. points out, healthcare providers face some big challenges. These include rising costs, workforce shortages, an aging population, and increased competition from nontraditional players.

Another challenge: Consumers expect their healthcare providers to offer new capabilities, such as digital scheduling and telemedicine, as well as better experiences.

One way healthcare providers hope to meet both sets of challenges is with advanced technology. Three-quarters of U.S. healthcare providers increased their IT spending in the last year, according to a survey by the consulting firm Bain & Co. The same survey found that 15% of healthcare providers already have an AI strategy in place, up from just 5% in 2023.

Generative AI is showing potential, too. Another survey, this one by McKinsey, finds that over 70% of healthcare organizations are now either pursuing GenAI proofs of concept or already implementing GenAI solutions.

Dynamic Duo

There’s a catch to all this: As healthcare providers adopt AI, they’re finding that the required datasets and advanced analytics don’t run well on their legacy IT systems.

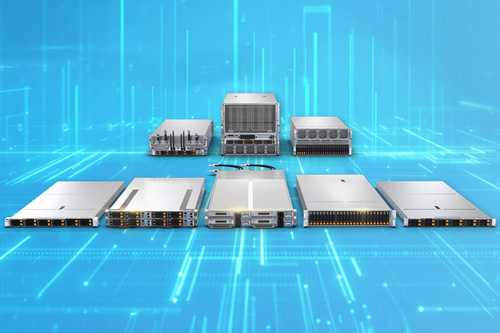

To help, Supermicro and AMD are working together to offer healthcare providers heavy-duty compute delivered at rack scale.

Supermicro servers powered by AMD Instinct MI300X GPUs are designed to accelerate AI and HPC workloads in healthcare. They offer the levels of performance, density and efficiency healthcare providers need to improve patient outcomes.

The AMD Instinct MI300X targets high performance for GenAI workloads and HPC applications. It packs no fewer than 304 high-throughput compute units, along with AI-specific functions and 192GB of HBM3 memory, all built on AMD's CDNA 3 architecture.

Healthcare providers can use Supermicro servers powered by AMD GPUs for next-generation research and treatments. Examples could include advanced drug discovery, enhanced diagnostics and imaging, risk assessments and personalized care, and better patient support through self-service tools and real-time edge analytics.

Supermicro points out that its servers powered by AMD Instinct GPUs deliver massive compute with rack-scale flexibility, as well as high levels of power efficiency.

Performance:

- The powerful combination of CPUs, GPUs and HBM3 memory accelerates HPC and AI workloads.

- HBM3 memory provides up to 192GB of dedicated capacity per GPU.

- Complete solutions ship pre-validated, ready for instant deployment.

- Double-precision (FP64) matrix performance reaches a peak of up to 163.4 TFLOPS.

Flexibility:

- Proven AI building-block architecture streamlines deployment at scale for the largest AI models.

- An open AI ecosystem built on AMD ROCm open software.

- A unified computing platform with AMD Instinct MI300X plus AMD Infinity Fabric and infrastructure.

- A modular design and build helps users reach the right configuration faster.

Efficiency:

- Dual-zone cooling, an innovation used by some of the most efficient supercomputers on the Green500 list.

- Improved density with 3rd Gen AMD CDNA, delivering 19,456 stream cores.

- Chip-level power intelligence helps the AMD Instinct MI300X deliver strong performance per watt.

- Purpose-built silicon design of the 3rd Gen AMD CDNA combines 5nm and 6nm fabrication processes.
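The stream-core figure above lines up with the 304 compute units cited earlier. As a quick sanity check, assuming 64 stream processors per CDNA 3 compute unit (a ratio implied by the two published totals rather than stated in this article):

$$
304 \ \text{compute units} \times 64 \ \frac{\text{stream cores}}{\text{compute unit}} = 19{,}456 \ \text{stream cores}
$$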

Are your healthcare clients looking to unleash the potential of their data? Then tell them about Supermicro systems powered by AMD Instinct MI300X GPUs.

Do More:

- Check out Supermicro solutions for healthcare

- Get tech specs on the AMD Instinct MI300X GPU