AMD and Supermicro are helping Industrial Light & Magic (ILM) create the future of visual movie and TV production.

ILM is the visual-effects company founded by George Lucas in 1975. Today it’s still on the lookout for better, faster tech. And to get it, ILM leans on Supermicro for its rackmount servers and workstations, and AMD for its processors.

The servers help ILM reduce render times. And the workstations enable better collaboration and storage solutions that move data faster and more efficiently.

All that high-tech gear comes together to help ILM create some of the world’s most popular TV series and movies. That includes “Obi-Wan Kenobi,” “Transformers” and “The Book of Boba Fett.”

It’s a huge task. But hey, someone’s got to create all those new universes, right?

Power hungry—and proud of it

No one gobbles up compute power quite like ILM. Sure, it may have all started with George Lucas dropping an automotive spring on a concrete floor to create the sound of the first lightsaber. But these days, it’s all about the 1s and 0s—a lot of them.

An enormous amount of compute power goes into rendering computer-generated imagery (CGI) like special effects and alien characters. So much power, in fact, that it can take weeks or even months to render an entire movie’s worth of eye candy.

Rendering takes not only time, but also money and energy. Those are the three resources that production companies like ILM must ration. They’re under pressure to manage cash flow and keep to tight production schedules.

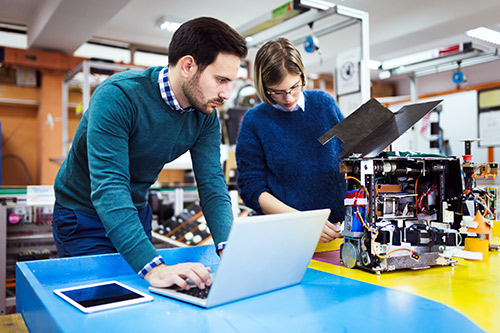

By deploying Supermicro’s high-performance and multinode servers powered by AMD’s EPYC processors, ILM gains high core counts and maximum throughput, two crucial components of faster rendering.

Modern filmmakers are also obliged to manage data. Storing and moving terabytes of rendering and composition information is a constant challenge, especially when you’re trying to do it quickly and securely.

The solution to this problem comes in the form of high-performance storage and networking devices. They can shift vast swaths of information from here to there without bottlenecks, overheating or (worst-case scenario) total failure.

EPYC stories

This is the part of the story where CPUs take back some of the spotlight. GPUs have been stealing the show ever since data scientists discovered that graphics processors are the keys to unlocking the power of AI. But producing the next chapter of the “Star Wars” franchise means playing by different rules.

AMD EPYC processors play a starring role in ILM’s render farms. Render farms are big collections of networked server-class computers that work as a team to crunch a metric ton of data.

A typical ILM render farm might contain dozens of high-performance computers like the Supermicro BigTwin. This dual-node processing behemoth can house two 3rd gen AMD EPYC processors, 4TB of DDR4 memory per node and a dozen 2.5-inch hot-swappable solid-state drives (SSDs). In case the specs don’t speak for themselves, that’s an insane amount of power and storage.

For ILM, lighting and rendering happen inside an application by Isotropix called Clarisse. Our hero, Clarisse, relies on CPU rather than GPU power. And unlike most 3D apps, which are single-threaded, Clarisse features unusually efficient multi-threading.

This lets the application take advantage of the parallel-processing power in AMD’s EPYC CPUs to complete more tasks simultaneously. The results: shorter production times and lower costs.
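To make the idea concrete, here is a minimal Python sketch of how a CPU renderer can spread work across many cores. It is not Clarisse's code or ILM's pipeline. The `shade` function is a made-up stand-in for real lighting math, and every name and parameter here (`render_frame`, `tile`, `pool_cls`) is an assumption chosen for illustration. The frame is cut into tiles, each tile is handed to a worker, and the results are stitched back together.

```python
import concurrent.futures as cf
import os


def shade(x, y):
    """Hypothetical stand-in for an expensive per-pixel lighting computation."""
    value = 0
    for i in range(200):
        value = (value * 31 + x * 17 + y * 13 + i) % 256
    return value


def render_tile(bounds):
    """Render one rectangular tile and return it along with its origin."""
    x0, y0, x1, y1 = bounds
    pixels = [[shade(x, y) for x in range(x0, x1)] for y in range(y0, y1)]
    return x0, y0, pixels


def split_into_tiles(width, height, tile):
    """List (x0, y0, x1, y1) bounds that cover the frame exactly once."""
    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in range(0, height, tile)
        for x in range(0, width, tile)
    ]


def render_frame(width, height, tile=16, workers=None,
                 pool_cls=cf.ProcessPoolExecutor):
    """Render every tile on a worker pool and stitch the results together."""
    frame = [[0] * width for _ in range(height)]
    tiles = split_into_tiles(width, height, tile)
    with pool_cls(max_workers=workers or os.cpu_count()) as pool:
        # Tiles are independent, so each worker can run on its own core.
        for x0, y0, pixels in pool.map(render_tile, tiles):
            for dy, row in enumerate(pixels):
                frame[y0 + dy][x0:x0 + len(row)] = row
    return frame
```

Because no tile depends on any other, adding cores lets more tiles render at the same time. That is the general reason high core counts matter for this kind of workload. When running this as a script with the default process pool, call `render_frame` from under an `if __name__ == "__main__":` guard.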

Coming soon: StageCraft

ILM is taking its tech show on the road with an end-to-end virtual production solution called StageCraft. It exists both as a series of Los Angeles- and Vancouver-based sites (ILM calls them “volumes”) and as mobile pop-up volumes waiting to happen anywhere in the United States and Europe.

The introduction of StageCraft is interesting for a couple of reasons. For one, this new production environment makes ILM’s AMD-powered magic wand accessible to a wider range of directors, producers and studios.

For another, StageCraft could catalyze the proliferation of cutting-edge creative tech. This, in turn, could lead to the same kind of competition, efficiency increases and miniaturization that made 4K filmmaking a feature of everyone’s mobile phones.

StageCraft could also usher in a new visual language. The more people with access to high-tech visualization technology, the more likely it is that some unknown aspiring auteur will pop up, seemingly out of nowhere, to change the nature of entertainment forever.

Kinda like how George Lucas did it back in the day.

Do more:

- Watch a video: ILM & AMD behind the scenes

- Check out Supermicro BigTwin