Supermicro has delivered 900 of its BigTwin® A+ 2124BT-HNTR servers, powered by AMD EPYC™ processors, to the European Organization for Nuclear Research (better known as CERN) to support the organization’s research. The systems will run batch computing jobs for physics-event reconstruction, data analysis and simulation. Many of CERN’s discoveries have had a powerful effect on everyday life, in areas such as medicine and computing.

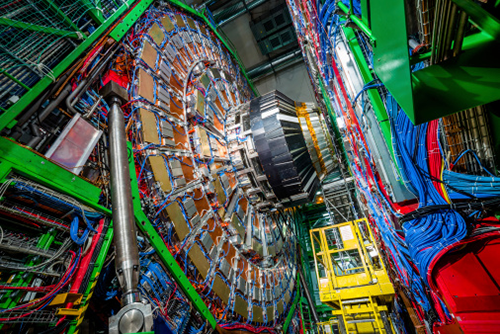

CERN is home to the largest physics project on Earth, the Large Hadron Collider (LHC). The LHC can collect data on subatomic particle interactions at a rate of 40TB per second. The lab therefore needs high-performance computers to sift through this massive volume of data and pick out the interactions most likely to give scientists the evidence they need to draw sound conclusions.

“It is a messy I/O challenge, and has huge data requirements,” said Niko Neufeld, a project leader for online computing at CERN. Neufeld oversees a project investigating the properties of a particle known as the beauty quark. The project is attempting to determine what happened in the nanoseconds just after the Big Bang, which created all matter. The data flows are handled by custom AMD circuitry that slices the LHC data into smaller pieces. “You need to get all the data pieces together in a single location because only then can you do a meaningful calculation on this stuff,” Neufeld said. The effort demands rapid data processing, high-bandwidth access to large amounts of memory and very fast I/O among the many Supermicro servers.
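Neufeld’s point that the pieces must be brought together before any meaningful calculation describes what data-acquisition systems commonly call event building. The toy Python sketch below illustrates the idea under simplifying assumptions: a fixed number of sources, each sending exactly one fragment per event, tagged with an event ID. The `EventBuilder` class and its method names are hypothetical and do not represent CERN’s software.

```python
from typing import Dict, Optional


class EventBuilder:
    """Toy event builder (hypothetical): gathers every source's fragment of an event.

    Assumes a fixed number of sources, each sending one fragment per event.
    """

    def __init__(self, num_sources: int) -> None:
        self.num_sources = num_sources
        # event_id -> {source_id: payload} for events still missing fragments
        self._pending: Dict[int, Dict[int, bytes]] = {}

    def add_fragment(self, event_id: int, source_id: int, payload: bytes) -> Optional[bytes]:
        """Store one fragment; return the assembled event once all sources have reported."""
        fragments = self._pending.setdefault(event_id, {})
        fragments[source_id] = payload
        if len(fragments) < self.num_sources:
            return None  # still waiting on other sources
        # All pieces are now in one place: join them in source order and release the event.
        del self._pending[event_id]
        return b"".join(fragments[s] for s in range(self.num_sources))


# Usage: fragments of event 7 arrive out of order from three hypothetical sources.
builder = EventBuilder(num_sources=3)
builder.add_fragment(event_id=7, source_id=0, payload=b"A")
builder.add_fragment(event_id=7, source_id=2, payload=b"C")
event = builder.add_fragment(event_id=7, source_id=1, payload=b"B")
print(event)
```

Only when the last fragment arrives does the builder hand back a complete event, which is why this step leans so heavily on memory capacity and fast I/O between servers.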

There are at least three reasons CERN chose servers and storage systems from Supermicro and AMD. First, CERN has been an AMD customer through many processor generations. Second, the platform supports 128 PCIe Gen 4 lanes, which let networking cards run with minimal bottlenecks. Third, the CPUs and servers support the 512GB of RAM installed in each server, so the servers can collectively keep pace with data arriving at 40TB/sec.
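The second and third reasons both come down to keeping up with the incoming data rate. As a rough, hypothetical back-of-envelope check, the Python sketch below compares the raw per-direction PCIe Gen 4 bandwidth of 128 lanes (16 GT/s per lane with 128b/130b encoding, as defined for PCIe 4.0) against an even share of the 40TB/sec stream spread across the 900 delivered servers. The even split, the decimal reading of “TB”, and ignoring protocol overhead are all assumptions for illustration; this is not how CERN sized its system.

```python
# Back-of-envelope bandwidth check (illustrative assumptions, not CERN's sizing method).

# PCIe Gen 4 signals at 16 GT/s per lane and uses 128b/130b line encoding.
PCIE4_TRANSFERS_PER_S = 16e9
ENCODING_EFFICIENCY = 128 / 130
LANES = 128  # PCIe Gen 4 lanes cited for the AMD EPYC platform

# Usable bytes per second per lane, per direction, before protocol overhead.
lane_bytes_per_s = PCIE4_TRANSFERS_PER_S * ENCODING_EFFICIENCY / 8
server_pcie_bytes_per_s = lane_bytes_per_s * LANES

# Assumption: the 40 TB/s stream (decimal TB) is split evenly over all 900 servers.
TOTAL_RATE_BYTES_PER_S = 40e12
SERVERS = 900
per_server_share = TOTAL_RATE_BYTES_PER_S / SERVERS

headroom = server_pcie_bytes_per_s / per_server_share

print(f"PCIe Gen 4 x128, per direction: {server_pcie_bytes_per_s / 1e9:.1f} GB/s")
print(f"Even share of 40 TB/s over 900 servers: {per_server_share / 1e9:.1f} GB/s")
print(f"Raw PCIe headroom factor: {headroom:.1f}x")
```

Real traffic is not spread evenly and protocol overhead reduces usable bandwidth, so the headroom figure is only an upper-bound intuition for why a high lane count matters.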

“This generation of the AMD EPYC™ processor platform offers an architectural advantage, and there isn’t any current server that offers as much power and slots,” Neufeld said. The compute power of the Supermicro BigTwin systems also let CERN reduce its overall server count by a third, making the project more energy efficient and freeing space to add more servers should they be needed. “We could eventually double our capacity and occupy the same physical space,” he said. “It gives us a lot of headroom.”