AMD advanced its AI vision at the “Advancing AI” event on June 12. The event, held live in the Silicon Valley city of San Jose, Calif., as well as online, featured presentations by top AMD executives and partners.

As many of the speakers made clear, AMD’s vision is for AI that is open, developer-friendly, collaborative and useful to all.

AMD certainly believes the market opportunity is huge. During the day’s keynote, CEO Lisa Su said AMD now expects the total addressable market (TAM) for data-center AI to exceed $500 billion as soon as 2028.

And that’s not all. Su also said she expects AI to move beyond the data center, finding new uses in edge computers, PCs, smartphones and other devices.

To deliver on this vision, Su explained, AMD is taking a three-pronged approach to AI:

- Offer a broad portfolio of compute solutions.

- Invest in an open development ecosystem.

- Deliver full-stack solutions via investments and acquisitions.

The event, lasting over two hours, was also filled with announcements. Here are the highlights.

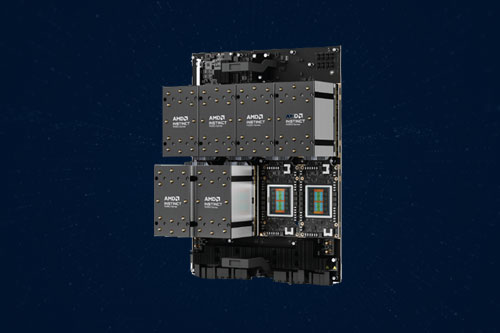

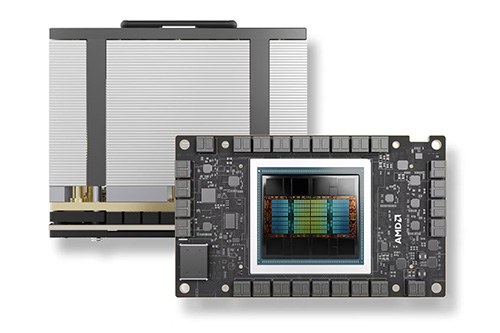

New: AMD Instinct MI350 Series

At the Advancing AI event, CEO Su formally announced the company’s AMD Instinct MI350 Series GPUs.

There are two models, the MI350X and MI355X. Though both are based on the same silicon, the MI355X supports a higher thermal envelope.

These GPUs, Su explained, are based on AMD’s 4th gen CDNA architecture, and each GPU comprises 10 chiplets containing a total of 185 billion transistors. The new Instinct GPUs can be used for both AI training and AI inference, and they can be deployed in either liquid-cooled or air-cooled systems.

Su said the MI355X delivers up to a 35x generational increase in AI inference performance over the previous-generation Instinct MI300. For AI training, the MI355X offers up to 3x more throughput than the MI300. And compared with a leading competitive GPU, the new AMD GPU can generate up to 40% more tokens per dollar.

AMD’s event also featured representatives of several companies already using AMD Instinct MI300 GPUs, including Microsoft, Meta and Oracle.

Introducing ROCm 7 and AMD Developer Cloud

Vamsi Boppana, AMD’s senior VP of AI, announced ROCm 7, the latest version of AMD’s open-source AI software stack. ROCm 7 features improved support for industry-standard frameworks; expanded hardware compatibility; and new development tools, drivers, APIs and libraries to accelerate AI development and deployment.

Earlier in the day, CEO Su said AMD’s software efforts “are all about the developer experience.” To that end, Boppana introduced the AMD Developer Cloud, a new service designed for rapid, high-performance AI development.

He also said AMD is giving developers a 25-hour credit on the Developer Cloud with “no strings.” The new AMD Developer Cloud is generally available now.

Road Map: Instinct MI400, Helios rack, Venice CPU, Vulcano NIC

During the last segment of the AMD event, Su gave attendees a sneak peek at several forthcoming products:

- Instinct MI400 Series: This GPU series is being designed for both large-scale AI inference and training. It will be the heart of the Helios rack solution (see below) and provide what Su described as “the engine for the next generation of AI.” Expect performance of up to 40 petaflops, 432GB of HBM4 memory, and memory bandwidth of 19.6TB/sec.

- Helios: The code name for a unified AI rack solution coming in 2026. As Su explained it, Helios will be a rack configuration that functions like a single AI engine, incorporating AMD’s EPYC CPU, Instinct GPU, Pensando Pollara network interface card (NIC) and ROCm software. Specs include up to 72 GPUs per rack and 31TB of HBM4 memory.

- Venice: This is the code name for the next generation of AMD EPYC server CPUs, Su said. They’ll be built on a 2nm process, feature up to 256 cores, and offer a 1.7x performance boost over the current generation.

- Vulcano: A future AMD NIC, Vulcano will be built on a 3nm process and feature speeds of up to 800Gb/sec.
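The Helios rack figures follow from the MI400 per-GPU specs. The back-of-the-envelope sketch below multiplies those per-GPU numbers by the 72 GPUs in a fully populated rack. It assumes every slot holds an MI400 GPU and uses decimal units (1TB = 1,000GB; 1 exaflop = 1,000 petaflops). The function name `rack_totals` is purely illustrative, and the results are peak-spec multiplications, not measured rack performance.

```python
# Back-of-the-envelope Helios rack totals, derived only from the MI400
# per-GPU figures quoted above. Assumes all 72 GPU slots are populated
# and uses decimal units (1 TB = 1,000 GB; 1 exaflop = 1,000 petaflops).
# These are peak-spec multiplications, not measured rack performance.

GPUS_PER_RACK = 72
HBM4_PER_GPU_GB = 432        # GB of HBM4 per MI400 GPU
MEM_BW_PER_GPU_TBPS = 19.6   # TB/sec of memory bandwidth per MI400 GPU
PEAK_PER_GPU_PFLOPS = 40     # "up to 40 petaflops" per MI400 GPU


def rack_totals(gpus: int = GPUS_PER_RACK) -> dict[str, float]:
    """Multiply the per-GPU peak figures by the number of GPUs in the rack."""
    return {
        "hbm_tb": gpus * HBM4_PER_GPU_GB / 1000,
        "mem_bw_pb_per_sec": gpus * MEM_BW_PER_GPU_TBPS / 1000,
        "peak_exaflops": gpus * PEAK_PER_GPU_PFLOPS / 1000,
    }


if __name__ == "__main__":
    for name, value in rack_totals().items():
        print(f"{name}: {value:.2f}")
```

The memory total comes to about 31.1TB, which is consistent with the 31TB Helios figure. The same multiplication puts aggregate memory bandwidth at roughly 1.4PB/sec and peak compute at about 2.9 exaflops, if you take the per-GPU numbers at face value.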

Do More:

- Meet the AMD Instinct MI350 Series GPUs

- Get more details on ROCm 7 and the AMD Developer Cloud

- Watch a YouTube replay of the AMD Advancing AI event